Four Deep Learning Papers to Read in August 2021

Original Source Here

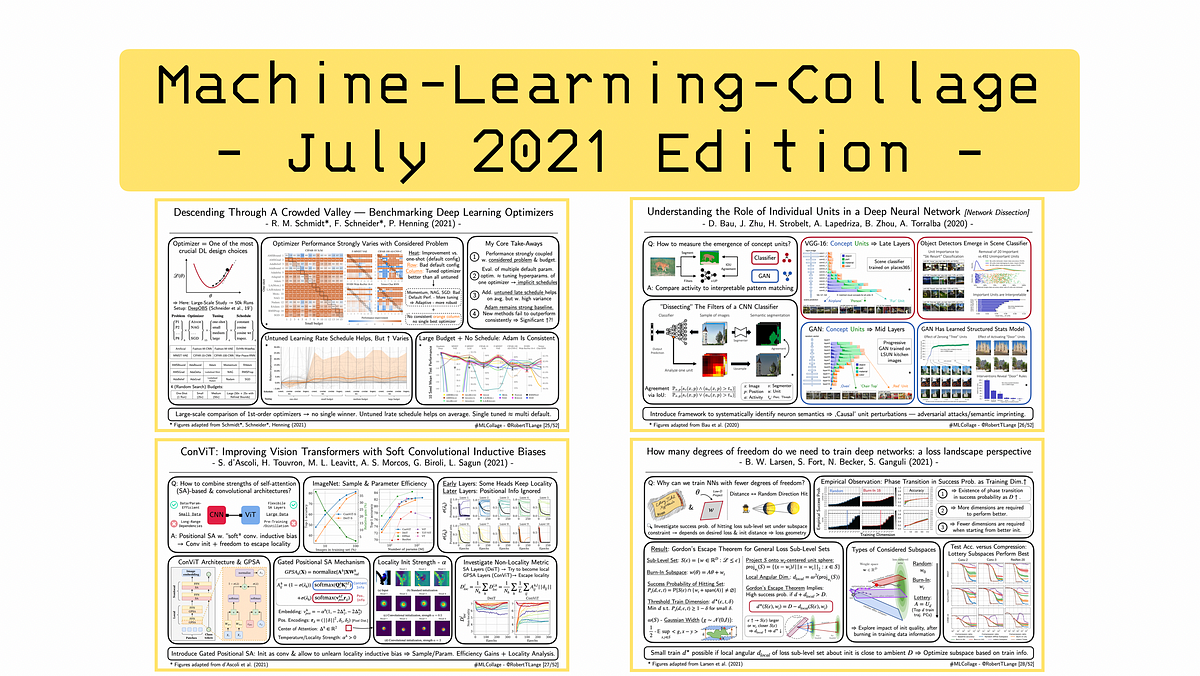

Four Deep Learning Papers to Read in August 2021

From Optimizer Benchmarks to Network Dissection, Vision Transformers & Lottery Subspaces

Welcome to the August edition of the ‚Machine-Learning-Collage‘ series, where I provide an overview of the different Deep Learning research streams. So what is a ML collage? Simply put, I draft one-slide visual summaries of one of my favourite recent papers. Every single week. At the end of the month all of the resulting visual collages are collected in a summary blog post. Thereby, I hope to give you a visual and intuitive deep dive into some of the coolest trends. So without further ado: Here are my four favourite papers that I read in July 2021 and why I believe them to be important for the future of Deep Learning.

‘Descending through a Crowded Valley — Benchmarking Deep Learning Optimizers’

Authors: Schmidt*, Schneider,* Henning (2021) | 📝 Paper | 🗣 Talk | 🤖 Code

One Paragraph Summary: Tuning optimizers is a fundamental ingredient to every Deep Learning-based project. There exist many heuristics such as the infamous starting point for the learning rate, 3e-04 (a.k.a. the Karpathy constant). But can we go beyond anecdotal stories and provide general insights into the performance of optimizers across task spaces? In their recent ICML paper, Schmidt et al. (2021) investigate this question by running a large-scale benchmark with over 50,000 training runs. They compare 15 different first-order optimizers for different tuning budget, training problems and learning rate schedules. While their results do not identify a clear winner, they still provide some insights:

- Unsurprisingly, the performance of different optimizers strongly depends on the considered problem and tuning budget.

- Evaluating the default hyperparameters for multiple optimisers is roughly as successful as tuning the hyperparameters of a single one. This may be due to an implicit learning rate schedule of adaptive methods (e.g. Adam).

- On average adding an untuned learning rate schedule improves the performance, but there is quite a large variance associated.

- Adam remains a strong baseline. Newer methods fail to consistently outperform it, highlighting the potential of specialized optimizers.

Unfortunately, the benchmark does not include any Deep RL or GAN-style training tasks. Nonetheless, such empirical investigations help practitioners who struggle with limited compute.

AI/ML

Trending AI/ML Article Identified & Digested via Granola by Ramsey Elbasheer; a Machine-Driven RSS Bot

via WordPress https://ramseyelbasheer.io/2021/08/02/four-deep-learning-papers-to-read-in-august-2021/