Deep Learning for Twitter Personality Inference

Original Source Here

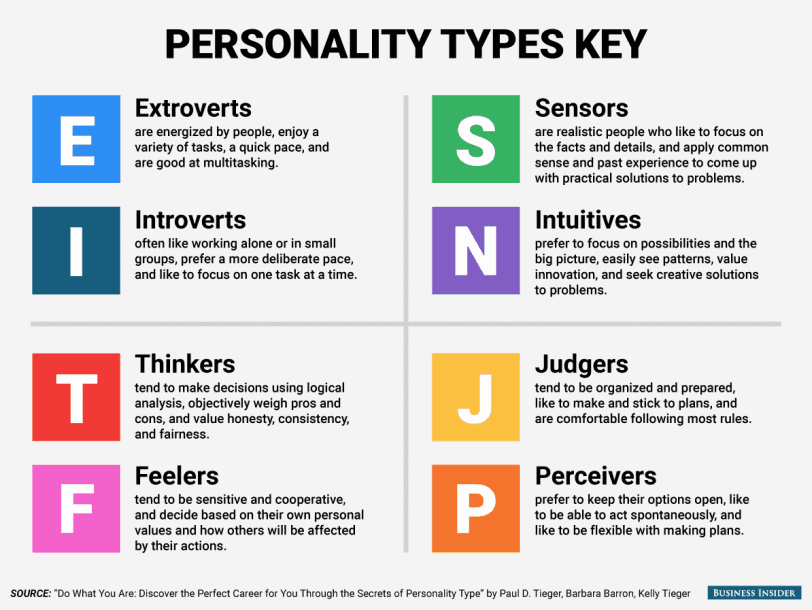

While this sample would ideally be larger, the dataset cannot be increased without unbalancing the classes than they already are in the dataset. This is because some (self-declared) personality types only number about 100 profiles in total. ISTPs were the least represented amongst all personalities — a finding consistent with theory, as ISTPs are described to be outdoor-oriented people who enjoy physical activity and working with tools. It wouldn’t be surprising if they don’t prefer the endlessly roundabout conversations on politics and abstract tech that Twitter is famous for. In general, I found that Intuitives (N) are significantly more prominent than Sensing(S) preference profiles. While I could not get an accurate estimate of this disparity in the Twitter population, the ratio is at least ~2:1 based on my sample. Many Intuitive types reached the 300 profile limit I specified; we can be sure that the 2:1 ratio is definitely underestimated. The national population estimate is about 1:2 — a reversal of the pattern we see on Twitter. This disparity is expected as the Twitter platform prioritizes abstract, idea-based themes — selecting for Intuitives more than Sensors (who prioritize concrete information perceived directly by the senses).

Data quality is key

For applied data science projects, the quality of the dataset is critical. One of the primary reasons why I chose to forgo some off-the-shelf MBTI datasets from Kaggle is because they are based on personality forum data (either from Reddit or some other website). As I mentioned before, models built on narrowly thematically-focussed text are not going to generalize well to real-world engagement, either on other social media websites or face-to-face communication. The Twitterverse, on the other hand, is massive — users are held to no thematic restrictions and discourse mirrors free-form communication much better.

Twitter profiles contain a lot of information — they hold user self-descriptions and self-expressions (bios and profile photos), active text-based communication (statuses), and user information consumption data (liked tweets). While there’s a whole lot more other information contained by the social network that users follow and are followed by, we only look at the former set of data sources for the purposes of this project.

The drawback to Twitter data (which affects all self-labeled MBTI datasets) is that the labels are not going to be super accurate. Many personality types are seen as socially desirable over others and are consequently self-reported more often. Personality tests — even professional ones — contain a significant degree of test-retest error. Considering this, I estimate that roughly between 70–90% of labels in our dataset are accurate for any single dimension. Even if the labels are not perfect, our models should still do better than chance if the system is valid, though the maximum possible accuracy would be limited. Note these labels are perfectly valid as measures of self-reported personality — if we reframe our problem that way, our labels are 100% accurate.

Part 3: Investigating text

We take a multi-dimensional perspective on Twitter profiles — we look at 3 distinct text sources that provide us unique perspectives of the social engagement of each of our Twitter profiles.

- Biographies — Passive engagement

- Statuses — Creative engagement

- Liked tweets — Information consumption

Summary

- We can classify personality traits of Twitter profiles with an accuracy of 66% on average and up to 73% for the Intuitive/Sensing dimension.

- BERT — a deep-learning-based NLP model — performs the best.

- Naive Bayes performance not far behind, combined models could yield even greater performance.

Methods

Let’s dive into the first set of methods we use — using text features only. We apply our methods — Naive Bayes, BERT, and a word2vec-based neural network — separately for each of the three sources — bios, statuses, and liked tweets. We split the data in a 90:10 for training and testing. We sample further to ensure that our classes are balanced both for training and testing (the latter is not necessary but this way the micro- and macro-average accuracy will be the same). So we end up with ~310–370 profiles in our test sets and 9x that data in our train sets. The variation is because traits are not all equally unbalanced before we sample again to ensure balanced classes.

Naive Bayes: Naive Bayes is a simple probabilistic classifier that assumes independence in the features it’s fed. For our problem, we first preprocess the text (stemming, lemmatization, etc.) and then obtain tf-idf vector representations of the text. Tf-idf (term frequency inverse document frequency) is a popular feature extraction method based on token counts. Tf-idf weights the signal of tokens present in a document with their frequency of appearance within the document and normalizes the signal for tokens common across documents. These tf-idf vectors are fed into our Naive Bayes classifier.

Word2vec embeddings + Neural Network: For this method, we first take word2vec vectors of all the tokens in our text and then average them to produce a single 300-dimensional vector. We feed this into a custom neural net with a single hidden layer (of size 64) to classify the embeddings. If you’re familiar with deep-learning approaches to NLP, you might notice that this isn’t a very information-efficient method — we lose a lot of information when we average the token vectors. However, I added this method for diagnostic purposes — to better understand where the signal in the text was coming from. As w2v embeddings roughly reflect the semantic properties of tokens, the average of these tokens would give us a representation of the median semantic space occupied by user text. To better understand the relationship of this representation to personality traits, we include this method.

BERT: BERT is a Transformer-based NLP that represents the latest paradigm of deep-learning-based NLP architectures. It’s trained on bidirectional language modeling — the task of predicting a missing word in a sentence — and predicting whether two sentences are contiguous. BERT & closely related models power Google Search and the latest generation of language technologies.

For building our models, we use the PyTorch implementation of BERT (base) from Hugging Face. For tuning hyperparameters, the validation set is set as 8% of the training set. I also tried multiple seeds for the random train-test split to ensure that we don’t end up overfitting the hyperparameters. The final choices of hyperparameters varied across the trait being classified and the source of features (statuses, bios, or liked tweets). I used batch sizes between 4–8 and a learning rate of 1e-5. For biographies, the token limit was set to 64, and for statuses and liked tweets it was set to 512.

All text is first cleaned of URLs, mentions (‘@X’), and all instances of any MBTI label. I don’t remove hashtags as they might contain some semantically generalizable signal. BERT can use hashtag-based tokens as it uses the WordPiece tokenizer i.e. it can split tokens to retrieve subparts. ‘#SaveWater’ contains useful lexical information that can be inferred without necessarily understanding the specific context of the hashtag itself. This line of reasoning doesn’t hold for all hashtags though (such as those with proper nouns), but in the off chance it works for some hashtags, I would rather not remove any.

Text Source #1: Biographies

We first take a look at biographies — self-descriptions of your Twitter profile. I set a max token limit of 64 (no bio exceeds this) for inputs — so these aren’t very large features.

Why use bios? It doesn’t take a genius to figure out that how you describe yourself socially probably has strong relationships to personality traits.

You try.

Take the example below and try to guess my MBTI traits! Hint: does my bio indicate that I prefer abstract, theoretical themes (Intuitive — N) or concrete, viscerally grounded information (Sensing — S)? (I provide answers later.)

So how did our classifiers do?

AI/ML

Trending AI/ML Article Identified & Digested via Granola by Ramsey Elbasheer; a Machine-Driven RSS Bot

via WordPress https://ramseyelbasheer.io/2021/06/12/deep-learning-for-twitter-personality-inference/