Understanding Localization in Self-Driving Cars — and its Technology

An Introduction to Self-Driving Cars (Part 3)

Previously, we discussed why self-driving cars are the future and how we got here by looking at the history of transportation. We then dove into the backbone of self-driving software itself, how to build an HD map, in the second part of this Introduction to Self-Driving Cars series.

High-definition mapping is critical in any driverless vehicle because it enables accurate localization and helps with sensor perception and path planning, all of which improve the safety and comfort of the driver and riders. Without this map data, it is almost impossible for an automated driving system to accurately localize itself and anticipate the road ahead.

What is localization?

“In theory, localization isn’t necessary, as long as the vehicle’s perception system can figure out everything in the environment. However, since it puts a lot of responsibility on the perception system, localization is used to greatly simplify the perception tasks.” — David Silver

By definition, localization is the ability of an autonomous vehicle to know exactly where it is in the world.

With localization, we can pinpoint a vehicle’s location on a map to within 10 centimeters. This high level of accuracy enables a self-driving car to understand its surroundings and establish a sense of the road and lane structures. Precise localization allows a vehicle to detect when a lane is forking or merging, plan lane changes, and determine lane paths even when markings aren’t clear.

How does localization work?

At its core, localization works by comparing what the car’s sensors see with what the map says should be there. Put simply, step by step:

- The vehicle’s sensors measure the distance between ego (the vehicle itself) and static objects around it, such as trees, walls, and road signs.

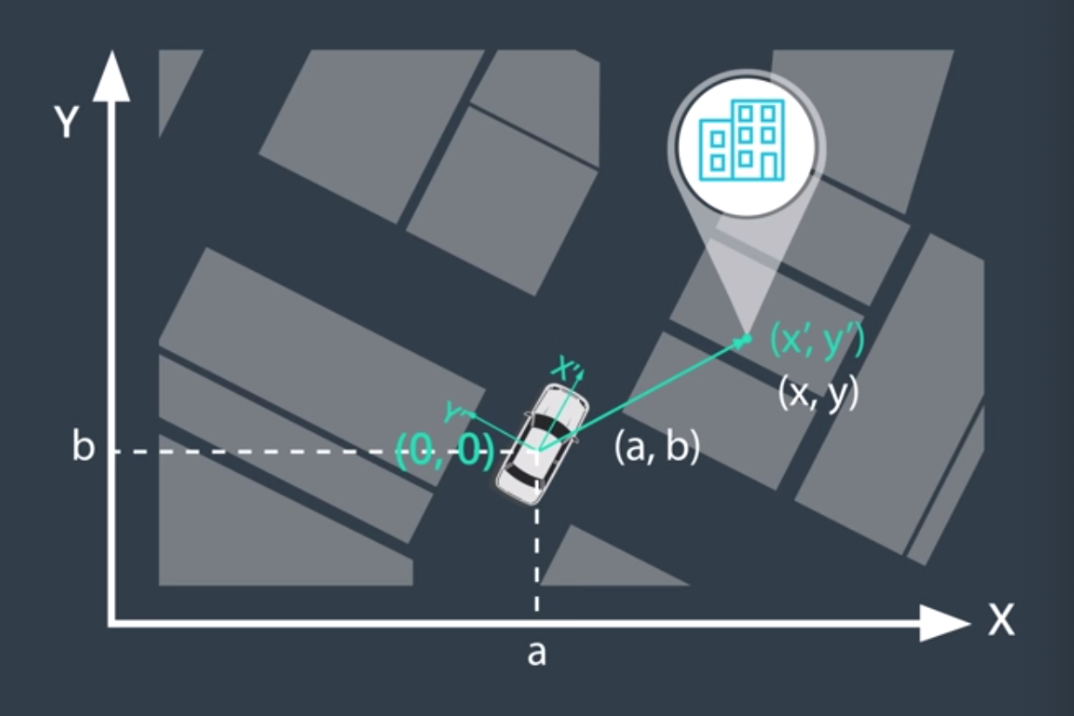

- Distances and directions to the static objects are measured in ego’s coordinate frame. In this frame, as in the image, the head of the car always points forward, along X’.

- Ego also compares the semantic landmarks that its sensors identify with the landmarks present in the HD map. This comparison requires transforming data from the sensors’ coordinate frame to the map’s coordinate frame, all within 10 cm accuracy.

Localization makes precise positioning possible by matching objects and landmarks in the vehicle’s environment with features from HD maps so it can determine exactly where it is in real time.
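The frame transformation in the last step can be sketched in a few lines. This is a minimal 2D illustration, assuming the vehicle pose is given as (x, y, heading) in the map frame; the function names `ego_to_map` and `matching_error` are hypothetical and not taken from any particular library.

```python
import math

def ego_to_map(ego_pose, landmark_in_ego):
    """Transform a landmark from the ego frame into the map frame.

    ego_pose: (x, y, heading) of the vehicle in the map frame, heading in radians.
    landmark_in_ego: (x', y') with x' pointing forward and y' pointing left.
    """
    x, y, heading = ego_pose
    lx, ly = landmark_in_ego
    cos_h, sin_h = math.cos(heading), math.sin(heading)
    # Rotate by the heading, then translate by the vehicle position.
    map_x = x + cos_h * lx - sin_h * ly
    map_y = y + sin_h * lx + cos_h * ly
    return map_x, map_y

def matching_error(ego_pose, observed_in_ego, landmark_in_map):
    """Distance between where the sensors place a landmark and where the map says it is."""
    mx, my = ego_to_map(ego_pose, observed_in_ego)
    return math.hypot(mx - landmark_in_map[0], my - landmark_in_map[1])

# A road sign seen 2 m straight ahead by a car at (10, 5) facing "north" (pi/2).
sign_in_map = ego_to_map((10.0, 5.0, math.pi / 2), (2.0, 0.0))  # close to (10, 7)
# If the pose estimate is off by 5 cm, the mismatch against the map reveals it.
error = matching_error((10.05, 5.0, math.pi / 2), (2.0, 0.0), (10.0, 7.0))
```

A localizer searches for the pose that makes this mismatch small across many landmarks at once; the single-landmark case here only shows the geometry.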

Technology — how they work and their challenges

Components like radar, LiDAR, and cameras provide the distance to objects surrounding the vehicle. If the exact locations of the surrounding objects are known, integrating the data from these sensors, with assistance from the HD map, provides the absolute vehicle location.

Keywords: Global Navigation Satellite System (GNSS), Inertial Measurement Unit (IMU), and Light Detection And Ranging Sensor (LiDAR)

In addition to HD maps, we’ll take a more detailed look at the technology behind localization: GNSS, IMU, LiDAR, and cameras.

1. Global Navigation Satellite System (GNSS)

The most widely known GNSS is the Global Positioning System, or GPS. GPS is a system of 30+ navigation satellites circling the Earth. The project was started by the U.S. government in 1973 with the aim of offering satellite-based navigation anywhere on Earth. According to NASA Space Place, these satellites, approximately 20,000 kilometers above the Earth’s surface, constantly send out signals that are picked up by a GPS receiver, such as the one in our phone. Once the receiver calculates its distance from three or more GPS satellites (with one more satellite for validation), it can figure out where we are.

To compute the time it takes for a signal from a satellite to travel to the GPS receiver: time = distance / speed of light (c ≈ 3 × 10⁸ m/s)
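As a quick worked example of this relation, the sketch below computes the travel time from a satellite roughly 20,000 km away, and shows how a small timing error turns into a large range error. The function names are hypothetical, and the satellite is assumed to be directly overhead.

```python
SPEED_OF_LIGHT_M_S = 299_792_458  # exact value; the text rounds it to 3e8 m/s

def travel_time_s(distance_m):
    """Time for a radio signal to cover the given distance."""
    return distance_m / SPEED_OF_LIGHT_M_S

def range_m(travel_time):
    """Distance implied by a measured signal travel time."""
    return SPEED_OF_LIGHT_M_S * travel_time

# Signal from a satellite about 20,000 km overhead: roughly 0.067 s.
t = travel_time_s(20_000_000)

# A clock error of one microsecond shifts the computed range by about 300 m,
# which is why receiver timing matters so much.
range_error = range_m(1e-6)
```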

Now think of a car with the same GPS receiver. Would it be safe to localize it based on GPS alone? GPS by itself isn’t enough, because it has drawbacks such as:

- Inaccurate positioning: accuracy depends on where we are and is typically about 4.9 m (16 ft). Environmental factors also add noise to GPS localization, which explains why our phone’s maps perform poorly in dense urban areas with tall buildings, like Manhattan.

- Low frequency: 10 hertz, or 10 updates per second, which means GPS takes 0.1 second to deliver an update. Because autonomous cars move quickly, they need updates more often than this.
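To put the update rate in perspective, here is a small calculation of how far a car travels between two consecutive position updates. The 100 km/h speed and the 100 Hz comparison rate are illustrative assumptions, not figures from the article.

```python
def distance_between_updates_m(speed_kmh, update_rate_hz):
    """Distance covered by the vehicle between two consecutive position updates."""
    speed_m_s = speed_kmh / 3.6  # convert km/h to m/s
    return speed_m_s / update_rate_hz

# At 100 km/h with 10 updates per second, the car moves almost 2.8 m "blind"
# between fixes, far more than the 10 cm accuracy target.
gap_at_10_hz = distance_between_updates_m(100, 10)

# A hypothetical 100 Hz source would shrink that gap to under 0.3 m.
gap_at_100_hz = distance_between_updates_m(100, 100)
```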

To reduce these drawbacks and minimize error, a Real Time Kinematic (RTK) positioning technique is added to the equation.

RTK involves setting up several base stations on the ground, each with a known ground-truth location (x1, y1). Comparing that location with the station’s own GPS output (x2, y2) gives the position error, which is then sent to GPS receivers, such as the one in a car, so they can adjust their measurements.

Overall, RTK uses signals from a nearby fixed base station to measure these errors and transmit them to the vehicle. An RTK correction network can bring accuracy to within 10 centimeters on average. There is still room for improvement, however, because signals transmitted from space are affected by imprecision in the satellite orbit, satellite clock errors, and atmospheric disturbances.
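The correction idea described above can be sketched as follows. This is a deliberately simplified illustration that corrects final (x, y) positions, as the text describes; production RTK works on per-satellite carrier-phase measurements rather than on finished position fixes. All names and coordinates are hypothetical.

```python
def base_station_error(ground_truth, gps_reading):
    """Error observed at a base station whose true position (x1, y1) is known."""
    return (gps_reading[0] - ground_truth[0], gps_reading[1] - ground_truth[1])

def apply_correction(vehicle_gps_reading, error):
    """Subtract the base station's error from a nearby vehicle's GPS reading.

    Assumes the vehicle is close enough to the station that both receivers
    see roughly the same error.
    """
    return (vehicle_gps_reading[0] - error[0], vehicle_gps_reading[1] - error[1])

# Base station: surveyed at (100.0, 200.0), but its GPS reports (101.5, 198.0).
error = base_station_error((100.0, 200.0), (101.5, 198.0))  # (1.5, -2.0)

# A nearby car reports (351.5, 448.0); the corrected estimate is (350.0, 450.0).
corrected = apply_correction((351.5, 448.0), error)
```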

2. Inertial Measurement Unit (IMU)

An Inertial Measurement Unit (IMU) is a sensor device that directly measures a vehicle’s three linear acceleration components and three rotational rate components (and thus its six degrees of freedom).

Unlike cameras, LiDAR, radar, and ultrasonic sensors, an IMU requires no information or signals from outside the vehicle. This independence from the environment makes the IMU a core technology for both safety and sensor fusion.

An IMU measures the forces of acceleration (gravity and motion) and the angular rates of the vehicle. When combined with a GNSS receiver, it can provide a complete positioning solution that accurately determines a vehicle’s position and attitude. When the GNSS signal isn’t available, the IMU measures the vehicle’s motion and estimates its position until the GNSS receiver can again access the satellites and recalculate the position.

An IMU consists of two core parts:

- Accelerometer, which outputs linear acceleration signals on three axes in space

- Gyroscope, which outputs angular velocity signals on three axes in space.
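Estimating position from these two signals is usually called dead reckoning. The sketch below is a minimal planar version, assuming only a forward acceleration and a yaw rate (one accelerometer axis and one gyroscope axis); a real IMU pipeline integrates all six measurements and removes gravity first. The function name and state layout are hypothetical.

```python
import math

def dead_reckon(state, forward_accel, yaw_rate, dt):
    """Advance a planar vehicle state by one IMU sample.

    state: (x, y, heading, speed) in metres, radians, and metres per second.
    forward_accel: accelerometer reading along the vehicle's forward axis (m/s^2).
    yaw_rate: gyroscope reading about the vertical axis (rad/s).
    """
    x, y, heading, speed = state
    speed += forward_accel * dt   # integrate acceleration into speed
    heading += yaw_rate * dt      # integrate angular rate into heading
    x += speed * math.cos(heading) * dt
    y += speed * math.sin(heading) * dt
    return (x, y, heading, speed)

# Drive straight at 10 m/s for one second, sampled at 100 Hz.
state = (0.0, 0.0, 0.0, 10.0)
for _ in range(100):
    state = dead_reckon(state, forward_accel=0.0, yaw_rate=0.0, dt=0.01)
# state[0] is now about 10 m; no outside signal was needed.
```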

The IMU helps provide “localization” data, which is the information about where the car is. Software implementing driving functions combines this information with map data and perception stack data that tell the car about the objects and features around it, which we will discuss further in the next part of this series. The perception stack is essentially the brain of an autonomous vehicle. We can think of it as a collection of hardware and software components consolidated into a platform that can handle end-to-end vehicle automation.

Perception stack keywords: perception, data fusion, cloud/OTA, localization, behavior, control and safety.

One of the main challenges with an IMU is that its motion error accumulates over time, so we combine it with GNSS/GPS, which updates less often but does not drift, to correct the IMU’s position error. The combination gives a more robust localization result, but it still won’t solve the localization problem completely.
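The drift and its correction can be illustrated with a toy simulation. It assumes a stationary vehicle, a constant accelerometer bias, and ideal noise-free GNSS fixes that report both position and velocity. The fixed-gain blend is a stand-in for the Kalman filter a real system would typically use. Every name and number here is hypothetical.

```python
def simulate(accel_bias, duration_s, imu_rate_hz=100, gnss_rate_hz=None, gain=0.8):
    """Return the final position error (m) for a vehicle that is actually parked at x = 0."""
    dt = 1.0 / imu_rate_hz
    steps = int(round(duration_s * imu_rate_hz))
    steps_per_fix = int(round(imu_rate_hz / gnss_rate_hz)) if gnss_rate_hz else None
    pos, vel = 0.0, 0.0
    for step in range(1, steps + 1):
        # IMU-only prediction: the bias is integrated twice, so error grows like t^2.
        vel += accel_bias * dt
        pos += vel * dt
        if steps_per_fix and step % steps_per_fix == 0:
            # Assumed ideal GNSS fix at the true state (position 0, velocity 0).
            pos += gain * (0.0 - pos)
            vel += gain * (0.0 - vel)
    return abs(pos)

# A small 0.05 m/s^2 bias drifts by roughly 90 m after one minute of IMU-only use.
imu_only_error = simulate(accel_bias=0.05, duration_s=60)

# With a 10 Hz GNSS correction, the error stays well under a centimetre.
fused_error = simulate(accel_bias=0.05, duration_s=60, gnss_rate_hz=10)
```

Real GNSS fixes are noisy, so the fused error in practice is bounded by GNSS accuracy rather than reaching the near-zero value this idealized run produces.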

3. Light Detection And Ranging Sensor (LiDAR)

LiDAR, which stands for Light Detection and Ranging, is an active remote sensing method that uses light in the form of a pulsed laser to measure ranges (variable distances) to the vehicle’s surroundings. These light pulses, combined with other sensor data, can generate precise, three-dimensional information about the shape of objects and landmarks surrounding the car, as well as their surface characteristics.

A LiDAR instrument principally consists of a laser, a scanner, and a specialized GPS receiver (NOAA, U.S. Department of Commerce). LiDAR sensors continuously rotate and generate thousands of laser pulses per second. These high-speed laser beams are emitted continuously across the 360-degree surroundings of the vehicle and are reflected by the objects in their way.
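The ranging principle can be sketched as follows: the sensor times each pulse’s round trip, halves it to get the one-way range, and combines that range with the beam’s pointing angles to get a 3D point. The function names and the axis convention (x forward, y left, z up) are assumptions for illustration.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458

def range_from_time_of_flight(round_trip_s):
    """One-way distance to the reflecting object; the pulse travels there and back."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0

def polar_to_point(range_m, azimuth_rad, elevation_rad):
    """Convert one LiDAR return into an (x, y, z) point in the sensor frame."""
    horizontal = range_m * math.cos(elevation_rad)
    return (
        horizontal * math.cos(azimuth_rad),
        horizontal * math.sin(azimuth_rad),
        range_m * math.sin(elevation_rad),
    )

# A pulse that returns after one microsecond hit something about 150 m away.
distance = range_from_time_of_flight(1e-6)

# A return at 10 m range, 90 degrees to the left, level with the sensor.
point = polar_to_point(10.0, math.pi / 2, 0.0)  # close to (0, 10, 0)
```

Repeating this for every pulse in a full rotation yields the point cloud that later stages match against the HD map.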

With the use of complex machine learning algorithms, the data received this way is converted into real-time 3D graphics, often displayed as 3D images or 3D maps of the surrounding objects. This view also covers a 360-degree field, which helps the car drive on various road types and in various physical conditions.

LiDAR-based systems are highly accurate in object detection and recognition of 3D shapes, even at longer distances (~100–200 meters). A LiDAR system’s 3D mapping capability also helps in differentiating between cars, pedestrians, trees, and other objects, while also calculating and sharing details of their velocity in real time.

To localize a vehicle with LiDAR, point cloud matching is used: comparing the LiDAR scan with the HD map yields the global position of the vehicle. Iterative Closest Point (ICP) [Besl & McKay, 1992] is often used to minimize the error between the points from sensor scans and those from the HD map. As its name suggests, the idea is to repeatedly pair each scan point with its closest map point and adjust the alignment. The points converge if the starting positions are close enough.
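A compact 2D sketch of the ICP loop is shown below. It is an illustration under simplifying assumptions (brute-force nearest-neighbour search, 2D points, a fixed iteration count, no outlier rejection), not the implementation used in any particular vehicle stack. All function names are hypothetical.

```python
import math

def nearest(point, candidates):
    """Closest candidate to the given point (brute-force search)."""
    return min(candidates, key=lambda c: (c[0] - point[0]) ** 2 + (c[1] - point[1]) ** 2)

def best_rigid_transform(source, target):
    """Closed-form 2D rotation and translation that best maps paired source points onto target."""
    n = len(source)
    sx, sy = sum(p[0] for p in source) / n, sum(p[1] for p in source) / n
    tx, ty = sum(p[0] for p in target) / n, sum(p[1] for p in target) / n
    dot = cross = 0.0
    for (ax, ay), (bx, by) in zip(source, target):
        ax, ay, bx, by = ax - sx, ay - sy, bx - tx, by - ty
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    angle = math.atan2(cross, dot)
    c, s = math.cos(angle), math.sin(angle)
    return angle, tx - (c * sx - s * sy), ty - (s * sx + c * sy)

def icp(scan, map_points, iterations=20):
    """Iteratively align scan points to map points; returns aligned points and mean error."""
    current = list(scan)
    for _ in range(iterations):
        matches = [nearest(p, map_points) for p in current]  # pair with closest map point
        angle, dx, dy = best_rigid_transform(current, matches)
        c, s = math.cos(angle), math.sin(angle)
        current = [(c * x - s * y + dx, s * x + c * y + dy) for x, y in current]
    errors = []
    for p in current:
        q = nearest(p, map_points)
        errors.append(math.hypot(p[0] - q[0], p[1] - q[1]))
    return current, sum(errors) / len(errors)

# Map: an L-shaped set of landmarks. Scan: the same shape, slightly rotated and shifted.
map_points = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (3.0, 0.0), (0.0, 1.0), (0.0, 2.0)]
c, s = math.cos(0.1), math.sin(0.1)
scan = [(c * x - s * y + 0.2, s * x + c * y - 0.1) for x, y in map_points]
aligned, mean_error = icp(scan, map_points)
```

The rotation and shift that ICP recovers are exactly the correction to apply to the vehicle’s pose estimate. With a larger initial offset the nearest-point pairing can lock onto the wrong landmarks, which is the convergence caveat mentioned above.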

While this makes LiDAR localization robust given HD maps and sensor data, the main difficulties are constructing the HD map and keeping it up to date, since transient elements like pedestrians, cars, and bikes will have changed by the next time the vehicle passes through. Another disadvantage is that the laser’s wavelength and the detector’s signal-to-noise ratio can be affected by poor weather and temperature. LiDAR is also expensive and takes up space on the car, so it tends to make self-driving cars look a little bulkier.

The aforementioned disadvantages are some of the reasons LiDAR is used in coordination with cameras for the application of self-driving cars — which takes us to our next point.

4. Camera(s)

Camera images are the easiest type of data to collect. To use them for vehicle localization, they are combined with 3D map and GPS data for visual matching, such as matching lane lines or dynamic objects.

While cameras can be more reliable than LiDAR as a vision system, they lack LiDAR’s ability to measure range. That is why companies like Tesla, which do not depend on LiDAR in their vehicles’ Autopilot system, use other sensors like radar and ultrasonic sensors to detect range and distance.

It’s important to note that while image data is easy to obtain, the primary disadvantage of cameras is their lack of 3D information and the need to rely on a 3D map.
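The loss of 3D information can be seen in a standard pinhole camera projection. In the sketch below, the focal length and image centre are made-up example values, and `project` is a hypothetical helper name.

```python
def project(point_xyz, focal_px=1000.0, cx=640.0, cy=360.0):
    """Project a 3D point in the camera frame (z pointing forward) onto the image plane."""
    x, y, z = point_xyz
    # Dividing by depth z is what discards the range information.
    return (focal_px * x / z + cx, focal_px * y / z + cy)

# Two points on the same viewing ray, one at 10 m and one at 20 m depth.
near_pixel = project((1.0, 0.5, 10.0))
far_pixel = project((2.0, 1.0, 20.0))
# Both land on the same pixel, so a single image cannot tell them apart.
```

This is why a camera-only localizer leans on a 3D map or on other sensors to recover depth.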
