From Model-centric to Data-centric Artificial Intelligence

Original Source Here

From Model-centric to Data-centric Artificial Intelligence

Is all the craze over new and more complex model architectures justified ?

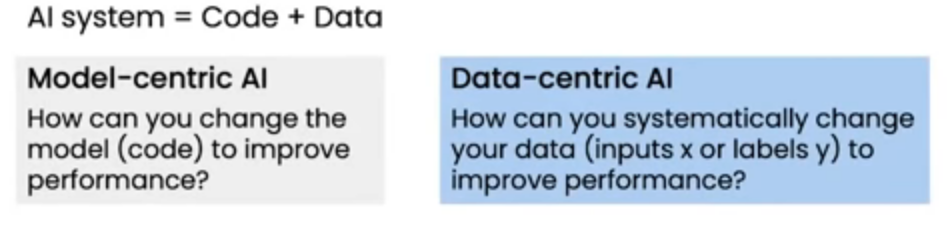

Two basic components of all AI systems are Data and Model, both go hand in hand in producing desired results. In this article we talk about how the AI community has been biased towards putting more effort in the model, and see how it is not always the best approach.

We all know that machine learning is an iterative process, because machine learning is largely an empirical science. You do not jump to the final solution by thinking about the problem, because you can no easily articulate what the solution should look like. Hence you empirically move towards better solutions. When you are in this iterative process, there are two major directions at you you disposal.

Model-Centric Approach

This involves designing empirical tests around the model to improve the performance. This consists of finding the right model architecture and training procedure among a huge space of possibilities.

Data-centric approach

This consists of systematically changing/enhancing the datasets to improve the accuracy of your AI system. This is usually overlooked and data collection is treated as a one off task.

Most machine learning engineers find this approach more exciting and promising, one reason being that it enables them to put their knowledge of machine learning models into practice. In contrast, working on data is sometimes thought of as a low skill task and many engineers prefer to work on models instead. But is this emphasis on models justified ? Why does it exist?

Model-centric Trends in AI community

Most people have been channeling major portion of their energies towards model centric AI.

One plausible reason is that AI industry closely follows academic research in AI. Owing to open source culture in AI , most cutting edge advances in the field are readily available to almost everyone with who can use github. Furthermore, tech giants fund and steer a good portion of research in AI so it remains relevant to solving real world problems.

Lately, AI research has been totally model-centric in nature ! This is because the norm has been to produce challenging and big datasets which become widely accepted benchmarks to access performance on a problem. Then follows a race amongst academics to achieve state of the art on these benchmarks ! Since, we have already fixed the state of dataset most of the research is channeled at model-centric approach. This creates a general impression in the community that model-centric approach is more promising.

Examining a sample of recent publications revealed that 99% of the papers were model-centric with only 1% being data-centric. ~ Andrew NG

Importance of Data

Though machine learning community lauds the importance of data and identify large amounts of data as a major driving force behind success of AI. It sometimes gets sidelined in the life cycle of ML projects. In his recent talk, Andrew NG points out how he thinks data centric approach to be more rewarding and calls for shift towards data-centrism in the community. As an example he relates few projects and how data-centric approach had been more successful.

Tools at your disposal in data-centric approach

More data is always not equivalent to better data. Three major aspects of data are:

Volume

Amount of data is very important, you need to have sufficient amount of data for you problem. Deep Networks are low bias high variance machines and we believe that more data is the answer to variance problem. But the approach of blindly amassing more data can be very data efficient and costly.

How is it that humans can learn to drive a car in about 20 hours of practice with very little supervision, while fully autonomous driving still eludes our best AI systems trained with thousands of hours of data from human drivers? ~ Yann Lecun

Before embarking on journey to find new data, we need to answer the question of what kind of data we need to add. This is usually achieved through error analysis of the current model. We talk about this in detail later.

Consistency

Consistency in data annotation is very important, because any inconsistency can derail the model and also make you evaluation unreliable. A new study reveals that about 3.4% of examples in widely used datasets were mislabeled, and they also find that the larger models are effected more by this.

If the accuracy cannot not be measured without the error pf +=3.4%, this raises major questions about plethora of research papers that beat previous benchmarks by a percent or two ! Thus for better training and reliable evaluation we need consistently labelled dataset.

The above example highlights how easily inconsistency in human labeling can creep into you data set. None of the annotations above are wrong, they are just inconsistent with each other and can confuse the learning algorithm. Thus we need to carefully design annotation instructions for consistency. It is necessary for the machine learning engineer to be fully acquainted with the dataset, I find it helpful to:

- Annotate a small sample of dataset myself before formulating instructions to get a better idea of possible errors an annotator can make.

- Review a random batch of annotated data to make sure everything is as expected. This should be made a standard practice for each annotation job, because annotation teams are constantly changing and it is easy for mistakes to occur even in later stages of the project.

- For tasks where we keep finding inconsistencies even after reviewing the instructions, it can be desirable to get data annotated by multiple people and use majority vote as ground truth.

Quality

Your data should be representative of the data that you expect to see in deployment, covering all variations that deployment data will present. Ideally, all attributes of data which are not causal features, should be adequately randomized. Common flaws in datasets that you should be mindful of are:

- Spurious correlations: when a non-causal attribute has association with the label, neural networks fails to learn the intended solution. For example in an object classification dataset, if cows normally appear in the grassland, model might learn to associate background with class. Learn more about this in: Neural Networks fail on data with Spurious Correlation

- Lack of variation: when a non-causal attribute like image brightness fails to vary sufficiently in the dataset, neural network can overfit to the distribution of that attribute, failing to generalize well. Models trained in day data will fail to do well in dark and vice versa. Learn more about this problem here: Striking failure of Neural Networks due to conditioning on totally irrelevant properties of data

Apart from collecting new data with more variation, data augmentation is an excellent strategy to break spurious correlations and lack of variation problems.

Systematic Data-centric Approach

Generally accepted workflow for machine learning projects is shown above. Where analysis of both training and deployment results can result in another phase of data collection and model training. Observing and correcting for issues in test data is a decent strategy. But it only solves the problems that have been observed through error analysis and does not safeguard against future problems. The same spurious correlation that exist sin training data might also be present in test data, which is highly like in they are randomly split.

We need a more proactive approach towards model robustness in unseen environments. To better understand the relaitonship between causality invariance and robust deep learning read this article: Invariance, Causality and Robust Deeplearning

Read my next article to learn a structured approach, spanning from problem definition to network evaluation, that can be used to train robust domain invariant models. Systematic Approach to Robust Deep Learning

AI/ML

Trending AI/ML Article Identified & Digested via Granola by Ramsey Elbasheer; a Machine-Driven RSS Bot

via WordPress https://ramseyelbasheer.io/2021/05/09/from-model-centric-to-data-centric-artificial-intelligence/