Understand Time Series Components with Python



Basic concepts for forecasting models in machine learning, with examples

In this article, we will discuss time series concepts with machine learning examples that deal with the time component in the data.

Forecasting is important in banking, weather prediction, population projection, and many other areas that deal directly with real-life problems.

Time series models treat the observed values as a function of time. Measurements are taken at regular intervals of time, and time serves as the independent variable for modeling.

$$Z = f(t)$$

where Z takes the values $Z_1, Z_2, \ldots, Z_n$ observed at the time points $t = T_1, T_2, \ldots, T_n$.
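As a minimal sketch of Z = f(t), a pandas Series indexed by timestamps pairs each value with its time point. The six values and the start date below are made-up numbers for illustration only.

```python
import pandas as pd

# Hypothetical observations Z_1..Z_6 (illustrative numbers, not real data)
values = [10, 12, 15, 14, 13, 16]

# The time points T_1..T_6: one month start per observation
times = pd.date_range(start="1949-01-01", periods=len(values), freq="MS")

# Z = f(t): each value is looked up by its time point
z = pd.Series(values, index=times, name="Z")

print(z.loc[pd.Timestamp("1949-03-01")])
```

Because the index is a DatetimeIndex, every value is addressed by when it was observed rather than by its position.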

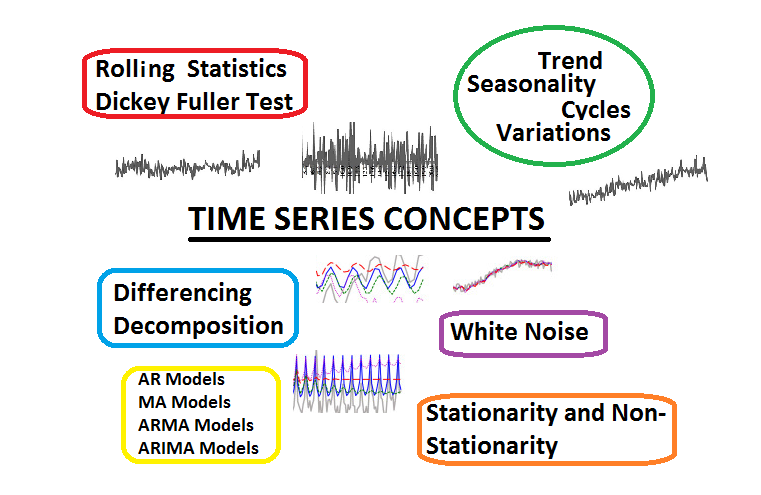

Topics to be covered:

- Components of Time Series

- White Noise

- Stationary and Non-Stationary

- Rolling Statistics and Dickey-Fuller test

- Differencing and Decomposition

- AR, MA, ARMA, ARIMA models

- ACF and PACF

Examples of time series data include daily petrol prices, the profits made by a company, and quarterly house sales.

Capabilities of time series analysis:

- It is an effective basis for forecasting decisions.

- It helps organizations predict an uncertain future.

- Combined with data mining techniques, it can be used to analyze the behavior of data.

Components of Time Series

Before we start modeling time series data, we need some basic definitions and concepts. There are four main components commonly seen in time series, as discussed below:

- Trend:

A trend is a movement of the data values that increase or decrease over time. A series can have an upward or a downward trend: an upward trend increases with time, and a downward trend decreases with time.

Example with Python:

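from datetime import datetime as dt
# assumes `data` holds the AirPassengers data: 'Month' strings such as '1949-01' and a '#Passengers' column
# make the month column a datetime, with the day set to the 15th
data['Month'] = data['Month'].apply(lambda x: dt(int(x[:4]), int(x[5:]), 15))
data = data.set_index('Month')
data.head()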

Now, plot a line chart to see the trend.

import matplotlib.pyplot as plt
ts = data['#Passengers']
plt.plot(ts)
plt.show()
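To put a number on the direction of a trend, one simple option (an assumption here, not the article's method) is to fit a straight line to the series against time and read the sign of the slope. The helper `trend_slope` is a hypothetical name, and the two series are synthetic.

```python
import numpy as np

def trend_slope(values):
    """Slope of a least-squares straight line fitted to values against time 0..n-1."""
    t = np.arange(len(values))
    slope, intercept = np.polyfit(t, values, deg=1)
    return slope

rng = np.random.default_rng(0)
t = np.arange(120)
# synthetic upward trend (0.5 units per step) plus small random noise
upward = 100 + 0.5 * t + rng.normal(0, 2, size=t.size)
# synthetic downward trend (-0.3 units per step) plus small random noise
downward = 100 - 0.3 * t + rng.normal(0, 2, size=t.size)

print(trend_slope(upward))    # positive slope: upward trend
print(trend_slope(downward))  # negative slope: downward trend
```

A straight line only captures the overall direction; curved or changing trends need a more flexible fit.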

When the index is not of a datetime type, it is necessary to convert the date column to datetime and set it as the index, so that all the features become a function of time.

For example:

import pandas as pd
# parse the 'month' column as datetimes and use it as the index
df['month'] = pd.to_datetime(df['month'])
df.set_index('month', inplace=True)

- Seasonality:

Seasonality is repeated behavior of the data that occurs at regular intervals of time: when patterns repeat themselves after a fixed period, we call it seasonality.

For example, in the plot above, the troughs and peaks occur at regular intervals of time.

- Cycles:

The difference between seasonality and cycles is that seasonality always has a fixed and known frequency. Cycles also rise and fall, but without a fixed frequency, and they usually last at least two years.

- Variations and Irregularities:

Variations and irregular patterns have no fixed frequency; they are of short duration and non-repeating.
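The four components can be made concrete with a synthetic additive series: a linear trend, a 12-step seasonal pattern, a slower cycle, and an irregular part. All coefficients and periods are illustrative assumptions, and real series may combine components multiplicatively instead.

```python
import numpy as np

rng = np.random.default_rng(42)
n = 144                      # e.g. 12 years of monthly data
t = np.arange(n)

trend = 100 + 2.0 * t                        # steady upward movement
seasonal = 15 * np.sin(2 * np.pi * t / 12)   # repeats every 12 steps (fixed, known frequency)
# slower rise and fall over several years; a fixed 50-step period is used only
# for simplicity here, real cycles vary in length
cycle = 8 * np.sin(2 * np.pi * t / 50)
irregular = rng.normal(0, 3, size=n)         # short, non-repeating variation

series = trend + seasonal + cycle + irregular

# the seasonal component repeats after one period of 12 steps
print(np.allclose(seasonal[:-12], seasonal[12:]))
```

Plotting `series` shows the same kind of rising, periodically peaking curve as the passengers plot.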

White Noise

White noise is the part of a time series that cannot be predicted or forecast, because each value has no correlation with the previous values. White noise has zero mean and constant variance.

A common special case is Gaussian white noise, whose points are drawn from a normal distribution (for example, the standard normal distribution with mean 0 and standard deviation 1). However, zero mean and constant variance alone do not make the noise Gaussian: white noise can also follow other distributions, such as a uniform distribution.

For example:

import numpy
import matplotlib.pyplot as plt
mean_value = 0
std_dev = 1
no_of_samples = 500
# 500 independent draws from a normal distribution: Gaussian white noise
time_data = numpy.random.normal(mean_value, std_dev, size=no_of_samples)
plt.plot(time_data)
plt.show()

The name "white" comes from the fact that such noise has equal power at all frequencies, and its values can follow any distribution with zero mean and constant variance. Independent draws from a normal distribution, as in these examples, form a discrete-time Gaussian white noise process.

For example:

import numpy as np
import seaborn as sns
import matplotlib.pyplot as plt
# mean value and standard deviation value
mean, std = 0, 1
# normal distribution with 5000 samples
samples = np.random.normal(mean, std, size=5000)
# histogram with a density curve; histplot replaces the deprecated sns.distplot
sns.histplot(samples, bins=20, kde=True, stat='density', edgecolor='red')
plt.show()
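The "no correlation with the previous value" property can be checked numerically. This NumPy-only sketch compares the lag-1 sample autocorrelation of Gaussian white noise with that of a random walk built by accumulating the same noise; `lag1_autocorr` is a hypothetical helper name introduced for illustration.

```python
import numpy as np

def lag1_autocorr(x):
    """Sample autocorrelation at lag 1: covariance of (x_t, x_{t-1}) over the variance."""
    x = np.asarray(x, dtype=float)
    d = x - x.mean()
    return np.sum(d[1:] * d[:-1]) / np.sum(d * d)

rng = np.random.default_rng(1)
noise = rng.normal(0, 1, size=1000)   # white noise: zero mean, constant variance
walk = np.cumsum(noise)               # random walk: each value = previous value + noise

print(round(lag1_autocorr(noise), 3))  # close to 0: nothing to predict from the past
print(round(lag1_autocorr(walk), 3))   # close to 1: strongly dependent on the past
```

The white noise value stays near zero, which is why no model can forecast it, while the random walk is almost perfectly correlated with its own past.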

Stationary and Non-Stationary

A stationary time series has a constant mean, a constant variance, and an autocorrelation that does not change over time. Many classical models, such as ARMA, require these conditions to hold.

A non-stationary time series is one whose mean, variance, or autocorrelation changes over time.

If the series is not stationary, we transform it into a stationary series and then confirm the result with a stationarity test.

Common transformations to achieve stationarity:

- If the data is not stationary, take the difference of consecutive values; the differenced series has one point fewer than the original.

- If the data shows a trend, fit a curve to the trend (for example, a regression line or a moving average) and keep the residuals.

- If the variance changes over time, take the square root or logarithm of the series to stabilize the variance.
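The three transformations above can be sketched with NumPy on a synthetic series whose level and spread both grow over time (an assumption chosen so that all three apply). The linear fit used for detrending is one choice of curve among many.

```python
import numpy as np

rng = np.random.default_rng(7)
t = np.arange(100)
# synthetic series with exponential growth: its level and its spread both increase
series = 50 * np.exp(0.02 * t) * np.exp(rng.normal(0, 0.05, size=t.size))

# 1) logarithm: turns the multiplicative growth and noise into additive ones
log_series = np.log(series)

# 2) differencing: y'_t = y_t - y_{t-1}; the result has one point fewer
diffed = np.diff(log_series)

# 3) detrending: fit a straight line to the log series and keep the residuals
slope, intercept = np.polyfit(t, log_series, deg=1)
residuals = log_series - (intercept + slope * t)

print(len(series), len(diffed))   # 100 99
print(round(diffed.mean(), 3))    # close to the growth rate 0.02
```

After the log, the growth becomes a straight line, so either differencing or subtracting the fitted line leaves a series without trend.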

Rolling Statistics and Dickey-Fuller test

These two checks are used to assess whether a time series is stationary.

Rolling statistics: we plot the moving mean and moving standard deviation of the series and check whether they vary with time. This is a visual check rather than a formal test.

The Dickey-Fuller test (here in its augmented form, computed by adfuller) is a hypothesis test whose null hypothesis is that the series has a unit root, i.e., it is non-stationary. We reject the null hypothesis, and treat the series as stationary, when the test statistic is smaller (more negative) than the critical value, or when the p-value is below the chosen significance level, such as 0.05.

import pandas as pd
import matplotlib.pyplot as plt
from statsmodels.tsa.stattools import adfuller

def test_stationarity(timeseries):
    # Determine rolling statistics (12-month window for this monthly data)
    rolmean = timeseries.rolling(window=12).mean()
    rolstd = timeseries.rolling(window=12).std()
    # Plot rolling statistics
    plt.plot(timeseries, color='blue', label='Original')
    plt.plot(rolmean, color='red', label='Rolling Mean')
    plt.plot(rolstd, color='black', label='Rolling Std')
    plt.legend(loc='best')
    plt.title('Rolling Mean & Standard Deviation')
    plt.show(block=False)
    # Perform the augmented Dickey-Fuller test
    print('Results of Dickey-Fuller Test:')
    dftest = adfuller(timeseries, autolag='AIC')
    dfoutput = pd.Series(dftest[0:4], index=['Test Statistic', 'p-value', '#Lags Used', 'Number of Observations Used'])
    for key, value in dftest[4].items():
        dfoutput['Critical Value (%s)' % key] = value
    print(dfoutput)

test_stationarity(data['#Passengers'])
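To see what adfuller computes in its simplest form, here is a NumPy-only sketch of the plain (non-augmented) Dickey-Fuller regression with a constant: regress $\Delta y_t$ on $y_{t-1}$, i.e. $\Delta y_t = \alpha + \gamma y_{t-1} + e_t$, and take the t-statistic of $\gamma$ (the null hypothesis is $\gamma = 0$, a unit root). The value -2.86 is the commonly quoted asymptotic 5% critical value for this constant-only case; adfuller also adds lagged differences (the "augmented" part) and reports MacKinnon p-values, so its numbers will differ. The function name `dickey_fuller_stat` is hypothetical.

```python
import numpy as np

def dickey_fuller_stat(y):
    """t-statistic of gamma in the regression dy_t = alpha + gamma * y_{t-1} + e_t."""
    y = np.asarray(y, dtype=float)
    dy = np.diff(y)                                   # dy_t = y_t - y_{t-1}
    X = np.column_stack([np.ones(len(dy)), y[:-1]])   # constant and lagged level
    coef, *_ = np.linalg.lstsq(X, dy, rcond=None)
    resid = dy - X @ coef
    sigma2 = resid @ resid / (len(dy) - X.shape[1])   # residual variance
    cov = sigma2 * np.linalg.inv(X.T @ X)             # OLS coefficient covariance
    return coef[1] / np.sqrt(cov[1, 1])

CRITICAL_5PCT = -2.86  # approximate asymptotic 5% critical value (constant, no trend)

rng = np.random.default_rng(3)
noise = rng.normal(size=500)   # stationary white noise
walk = np.cumsum(noise)        # random walk: has a unit root, non-stationary

for name, series in [("white noise", noise), ("random walk", walk)]:
    stat = dickey_fuller_stat(series)
    verdict = "reject H0 (stationary)" if stat < CRITICAL_5PCT else "fail to reject H0"
    print(f"{name}: DF statistic = {stat:.2f} -> {verdict}")
```

The white noise statistic lands far below the critical value, while the random walk statistic usually does not, matching the decision rule described above.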

Differencing and Decomposition

If the time series turns out to be non-stationary, there are two common techniques to make it (approximately) stationary.

- Differencing: subtracts the previous value from the current value, $y'_t = y_t - y_{t-1}$. It removes trend, turning a non-stationary series into a stationary one, and helps control autocorrelation.

An over-differenced series can produce inaccurate estimates, so differencing is not the right choice in every case.

- Decomposition: the trend is modeled, for example by regressing the series on time, and the residual from the regression is kept for modeling.
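Regression is one way to decompose a series. A common alternative, which is a different technique from the regression described above, is classical additive decomposition: estimate the trend with a centered 2x12 moving average, then average the detrended values month by month to get the seasonal component (statsmodels offers `seasonal_decompose` for the same idea). This NumPy sketch assumes monthly data with a 12-step season and uses a synthetic, noise-free series so the recovered seasonal pattern can be checked exactly.

```python
import numpy as np

def classical_decompose(y, period=12):
    """Additive decomposition y = trend + seasonal + residual (even period assumed)."""
    y = np.asarray(y, dtype=float)
    n = len(y)
    half = period // 2
    # centered 2 x period moving average: weight 1/(2*period) at both ends, 1/period inside
    weights = np.r_[0.5, np.ones(period - 1), 0.5] / period
    trend = np.full(n, np.nan)                 # ends stay undefined (NaN)
    trend[half:n - half] = np.convolve(y, weights, mode="valid")
    detrended = y - trend
    # seasonal index: average detrended value for each position in the season
    idx = np.array([np.nanmean(detrended[k::period]) for k in range(period)])
    idx -= idx.mean()                          # make the seasonal effects sum to zero
    seasonal = np.resize(idx, n)               # repeat the pattern across the series
    residual = y - trend - seasonal
    return trend, seasonal, residual

t = np.arange(120)
true_seasonal = 10 * np.sin(2 * np.pi * t / 12)
y = 50 + 0.8 * t + true_seasonal               # linear trend + seasonality, no noise

trend, seasonal, residual = classical_decompose(y)
print(np.allclose(seasonal, true_seasonal))
```

On real data the residual will not be zero; it holds whatever the trend and seasonal estimates do not explain.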

AR, MA, ARMA, ARIMA models

Several models can be used to fit time series data.

- AR model: an autoregressive model, which predicts future values as a weighted sum of past values.

It is used for forecasting when the values in a time series are correlated with the values that precede and follow them.

- MA model: a moving average model, which forecasts future values of a time series from random error (white noise) terms only.

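For example, the code below log-transforms the series and computes a 12-month rolling mean. Note that this rolling mean is a moving-average smoother used to estimate the trend, which is different from the MA model described above.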

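import numpy as np
import matplotlib.pyplot as plt
# log transformation to stabilize the growing variance
ts_log = np.log(ts)
# 12-month rolling mean and standard deviation of the log series
MA = ts_log.rolling(window=12).mean()
movingSTD = ts_log.rolling(window=12).std()
plt.plot(ts_log)
plt.plot(MA, color='red')
plt.show()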

- ARMA model: an autoregressive moving average model, which predicts future values using both past values and past error terms. In trading markets, for example, the autoregressive part captures mean-reversion and momentum effects, while the MA part captures the shock effects observed in the white noise terms.

- ARIMA model: the autoregressive integrated moving average model, a class of statistical models used to analyze and forecast time series data. It generalizes the ARMA model by adding integration: the series is differenced d times before an ARMA model is fitted.
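The AR and MA definitions above can be written out directly. This NumPy sketch simulates an AR(1) process, $x_t = \phi x_{t-1} + e_t$, and an MA(1) process, $m_t = e_t + \theta e_{t-1}$, from the same white noise $e_t$. The values $\phi = 0.7$ and $\theta = 0.6$ are arbitrary illustrative choices.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 5000
phi, theta = 0.7, 0.6
e = rng.normal(0, 1, size=n)          # white noise shocks

# AR(1): each value is a weighted past value plus a new shock
ar = np.zeros(n)
ar[0] = e[0]
for t in range(1, n):
    ar[t] = phi * ar[t - 1] + e[t]

# MA(1): each value is the current shock plus a weighted past shock
ma = np.empty(n)
ma[0] = e[0]
ma[1:] = e[1:] + theta * e[:-1]
```

With $|\phi| < 1$ the AR(1) process is stationary; setting $\phi = 1$ turns it into a random walk. An ARMA(1,1) process would simply combine both terms in one recursion.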

Before fitting the ARIMA model, we subtract the 12-month moving average from the log series to remove the trend. When no differencing is applied (d = 0), the model is usually referred to as an ARMA model.

ts_log_mv_diff = ts_log - MA

ACF and PACF

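- ACF: the autocorrelation function of a time series, used to identify the order q of the MA process. At lag k, it is the correlation between the value of the series at the current time and its value k steps earlier.

- PACF: the partial autocorrelation function, used to identify the order p of the AR process. At lag k, it is the correlation between the series and its value k steps earlier after removing the effect of the intermediate lags 1 to k-1.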
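To make the two definitions concrete, this NumPy-only sketch computes a sample ACF and a sample PACF by hand on simulated MA(1) and AR(1) series. The PACF here uses the regression method (the last OLS coefficient when regressing $x_t$ on $x_{t-1}, \ldots, x_{t-k}$), which is one of several estimators; statsmodels' `acf` and `pacf` may use other methods and give slightly different numbers. The helper names are hypothetical.

```python
import numpy as np

def sample_acf(x, nlags):
    """Sample autocorrelations r_0..r_nlags (r_0 is always 1)."""
    x = np.asarray(x, dtype=float)
    d = x - x.mean()
    denom = np.sum(d * d)
    return np.array([np.sum(d[k:] * d[:len(d) - k]) / denom for k in range(nlags + 1)])

def sample_pacf_ols(x, nlags):
    """Partial autocorrelations via regression: at lag k, the last coefficient of
    an OLS fit of x_t on a constant and x_{t-1}, ..., x_{t-k}."""
    x = np.asarray(x, dtype=float)
    out = [1.0]
    for k in range(1, nlags + 1):
        y = x[k:]
        lags = [x[k - j:len(x) - j] for j in range(1, k + 1)]
        X = np.column_stack([np.ones(len(y))] + lags)
        coef, *_ = np.linalg.lstsq(X, y, rcond=None)
        out.append(coef[-1])
    return np.array(out)

rng = np.random.default_rng(0)
n = 5000
e = rng.normal(size=n)
ar = np.zeros(n)
for t in range(1, n):
    ar[t] = 0.7 * ar[t - 1] + e[t]   # AR(1) with phi = 0.7
ma = e.copy()
ma[1:] += 0.6 * e[:-1]               # MA(1) with theta = 0.6

print(np.round(sample_acf(ma, 3), 2))       # MA(1): ACF cuts off after lag 1
print(np.round(sample_pacf_ols(ar, 3), 2))  # AR(1): PACF cuts off after lag 1
```

The cut-off pattern is what makes the ACF useful for choosing q and the PACF for choosing p: for MA(1) the theoretical lag-1 autocorrelation is $\theta / (1 + \theta^2) \approx 0.44$ and zero afterwards, and for AR(1) the partial autocorrelation is $\phi$ at lag 1 and zero afterwards.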

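For example, for the ACF:

import numpy as np
import matplotlib.pyplot as plt
from statsmodels.tsa.stattools import acf
# the first 11 values are NaN because the 12-month rolling window is incomplete
series = ts_log_mv_diff.dropna()
bound = 1.96 / np.sqrt(len(series))   # approximate 95% confidence bound
plt.plot(np.arange(0, 11), acf(series, nlags=10))
plt.axhline(y=0, linestyle='--', color='gray')
plt.axhline(y=-bound, linestyle='--', color='gray')
plt.axhline(y=bound, linestyle='--', color='gray')
plt.title('Autocorrelation Function')
plt.show()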

The ACF curve crosses the upper confidence bound between lags 0 and 1, so 0 or 1 is a reasonable choice for the MA order q of the ARIMA model.

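For example, for the PACF:

import numpy as np
import matplotlib.pyplot as plt
from statsmodels.tsa.stattools import pacf
# the first 11 values are NaN because the 12-month rolling window is incomplete
series = ts_log_mv_diff.dropna()
bound = 1.96 / np.sqrt(len(series))   # approximate 95% confidence bound
plt.plot(np.arange(0, 11), pacf(series, nlags=10))
plt.axhline(y=0, linestyle='--', color='gray')
plt.axhline(y=-bound, linestyle='--', color='gray')
plt.axhline(y=bound, linestyle='--', color='gray')
plt.title('Partial Autocorrelation Function')
plt.show()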

The PACF curve drops to 0 between lags 1 and 2, so 0 or 1 is a reasonable choice for the AR order p of the ARIMA model.
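The reading of the plots above follows a simple rule: a lag counts as significant when its (partial) autocorrelation lies outside $\pm 1.96/\sqrt{n}$. A hypothetical helper applying this rule to an array of ACF or PACF values (such as those returned by `acf` or `pacf`) might look like this. It only suggests candidate orders; the final choice still needs judgment.

```python
import numpy as np

def significant_lags(values, n_obs, z=1.96):
    """Lags >= 1 whose (partial) autocorrelation lies outside +/- z / sqrt(n_obs)."""
    values = np.asarray(values, dtype=float)
    bound = z / np.sqrt(n_obs)
    return [k for k in range(1, len(values)) if abs(values[k]) > bound]

# illustrative PACF values for a series of 100 observations (bound = 0.196)
example_pacf = [1.0, 0.65, 0.12, -0.05, 0.08]
print(significant_lags(example_pacf, n_obs=100))   # [1]
```

Here only lag 1 lies outside the band, which would point to an AR order of p = 1.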

The ARIMA model can be fitted as shown below. This version uses statsmodels.tsa.arima.model.ARIMA, which replaces the older statsmodels.tsa.arima_model.ARIMA (removed in statsmodels 0.13). Its fit() method takes no disp argument, and its fitted values are on the scale of the input series (here the log series) rather than the differenced scale.

import numpy as np
import matplotlib.pyplot as plt
from statsmodels.tsa.arima.model import ARIMA
# ARIMA(p=1, d=1, q=0) on the log series; the d=1 differencing is handled inside the model
model = ARIMA(ts_log, order=(1, 1, 0))
results_ARIMA = model.fit()
plt.plot(ts_log)
plt.plot(results_ARIMA.fittedvalues, color='red')
# skip the first point, which has no earlier observations to predict from
plt.title('RSS: %.4f' % np.sum((results_ARIMA.fittedvalues.iloc[1:] - ts_log.iloc[1:])**2))
plt.show()

To get the predictions on the original scale, undo the log transformation with the exponential:

# fitted values are log passengers, so exponentiate to return to passenger counts
predictions_ARIMA = np.exp(results_ARIMA.fittedvalues)
plt.plot(ts)
plt.plot(predictions_ARIMA)
plt.title('RMSE: %.4f' % np.sqrt(np.mean((predictions_ARIMA.iloc[1:] - ts.iloc[1:])**2)))
plt.show()

The orange curve is our prediction.
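The back-transformation behind these predictions can be checked by hand. The sketch below uses made-up numbers and an assumed coefficient $\phi = 0.5$ (no constant term). It shows that the first value plus the cumulative sum of differences rebuilds the log series, and works through one ARIMA(1,1,0) step: forecast the next difference as $\phi$ times the last difference, add it to the last log value, then exponentiate.

```python
import numpy as np

levels = np.array([100.0, 104.0, 110.0])   # hypothetical observations
log_levels = np.log(levels)
diffs = np.diff(log_levels)                # first differences of the log series

# undo differencing: first value + cumulative sum of differences
rebuilt = np.r_[log_levels[0], log_levels[0] + np.cumsum(diffs)]

# one ARIMA(1,1,0) step without a constant: next difference = phi * last difference
phi = 0.5                                  # assumed AR coefficient, not estimated
next_diff = phi * diffs[-1]
next_log = log_levels[-1] + next_diff
forecast = np.exp(next_log)                # back to the original scale

print(np.allclose(rebuilt, log_levels))
print(round(forecast, 2))
```

The same two steps (cumulative sum to undo differencing, exponential to undo the log) apply whenever a model is fitted on a differenced log series.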

Conclusion:

In time series modeling, a model is sometimes good at predicting the trend but fails to capture seasonality. This article is meant for beginners learning basic time series concepts; it does not build a complete model, but illustrates the ideas with Python examples.

I hope you liked the article. Reach me on LinkedIn and Twitter.



Trending AI/ML Article Identified & Digested via Granola by Ramsey Elbasheer; a Machine-Driven RSS Bot

via WordPress https://ramseyelbasheer.io/2021/04/10/understand-time-series-components-with-python/