Object detection on your images using SSD Mobilenet

Original Source Here

Object detection using SSD Mobilenet

Introduction

Object Detection is a common Computer Vision problem which deals with identifying and locating objects of certain classes in the image. Interpreting the object localization can be done in various ways, including creating a bounding box around the object or marking every pixel in the image which contains the object (called segmentation). Object detection was studied even before the breakout popularity of CNNs in Computer Vision. While CNN’s are capable of automatically extracting more complex and better features, taking a glance at the conventional methods can at worst be a small detour and at best an inspiration. Object detection before Deep Learning was a several-step process, starting with edge detection and feature extraction using techniques like SIFT, HOG, etc. These images were then compared with existing object templates, usually at multi-scale levels, to detect and localize objects present in the image.

Architecture

SSD (Single shot detector)

Single shot detectors (SSDs) seek a middle ground. Rather than using a subnetwork to propose regions, SSDs rely on a set of predetermined regions. A grid of anchor points is laid over the input image, and at each anchor point, boxes of multiple shapes and sizes serve as regions. For each box at each anchor point, the model outputs a prediction of whether or not an object exists within the region and modifications to the box’s location and size to make it fit the object more closely. Because there are multiple boxes at each anchor point and anchor points may be close together, SSDs produce many potential detections that overlap. Post-processing must be applied to SSD outputs in order to prune away most of these predictions and pick the best one. The most popular post-processing technique is known as non-maximum suppression.

For an object’s location, the most commonly-used metric is intersection-over-union (IOU). Given two bounding boxes, we compute the area of the intersection and divide it by the area of the union. This value ranges from 0 (no interaction) to 1 (perfectly overlapping). For labels, a simple “percent correct” can be used.

MobileNet

The MobileNet model is based on depthwise separable convolutions which is a form of factorized convolutions that factorize a standard convolution into a depthwise convolution and a 1×1 convolution called a pointwise convolution. For MobileNets the depthwise convolution applies a single filter to each input channel. The pointwise convolution then applies a 1×1 convolution to combine the outputs of the depthwise convolution. A standard convolution both filters and combines inputs into a new set of outputs in one step. The depthwise separable convolution splits this into two layers, a separate layer for filtering and a separate layer for combining. This factorization has the effect of drastically reducing computation and model size.

Depthwise separable convolution is made up of two layers: depthwise convolutions and pointwise convolutions. We use depthwise convolutions to apply a single filter per each input channel (input depth). Pointwise convolution, a simple 1×1 convolution, is then used to create a linear combination of the output of the depthwise layer. MobileNets use both batch norm and ReLU nonlinearities for both layers.

where ^K is the depthwise convolutional kernel of size DK×DK×M where the mth filter in ^K is applied to the mth channel in F to produce the mth channel of the filtered output feature map ^G. Depthwise convolution has a computational cost of:

an additional layer that computes a linear combination of the output of depthwise convolution via 1×1 convolution is needed in order to generate these new features. Depthwise separable convolutions cost:

which is the sum of the depthwise and 1×1 pointwise convolutions.

Non-Maximum suppression

Non Maximum Suppression (NMS) is a technique used in many computer vision algorithms. It is a class of algorithms to select one entity (e.g. bounding boxes) out of many overlapping entities. The selection criteria can be chosen to arrive at particular results. Most commonly, the criteria are some form of probability number along with some form of overlap measure (e.g. IOU).

SoftMax

Softmax returns a probability distribution over the target classes in a multiclass classification problem. The Softmax function allows us to express our inputs as a discrete probability distribution. Mathematically, this is defined as follows:

for each value (i.e. input) in our input vector, the Softmax value is the exponent of the individual input divided by a sum of the exponents of all the inputs. This ensures that multiple things happen:

- Negative inputs will be converted into nonnegative values, thanks to the exponential function.

- Each input will be in the interval (0,1).

- As the denominator in each Softmax computation is the same, the values become proportional to each other, which makes sure that together they sum to 1.

Use cases and applications

In this section, we’ll provide an overview of real-world use cases for object detection. We’ve mentioned several of them in previous sections, but here we’ll dive a bit deeper and explore the impact this computer vision technology can have across industries.

Video surveillance

Because state-of-the-art object detection techniques can accurately identify and track multiple instances of a given object in a scene, these techniques naturally lend themselves to automated video surveillance systems.

For instance, object detection models are capable of tracking multiple people at once, in real-time, as they move through a given scene or across video frames. From retail stores to industrial factory floors, this kind of granular tracking could provide invaluable insights into security, worker performance and safety, retail foot traffic, and more.

Crowd counting

Crowd counting is another valuable application of object detection. For densely populated areas like theme parks, malls, and city squares, object detection can help businesses and municipalities more effectively measure different kinds of traffic — whether on foot, in vehicles, or otherwise.This ability to localize and track people as they maneuver through various spaces could help businesses optimize anything from logistics pipelines and inventory management, to store hours, to shift scheduling, and more. Similarly, object detection could help cities plan events, dedicate municipal resources, etc.

Anomaly detection

Anomaly detection is a use case of object detection that’s best explained through specific industry examples. In agriculture, for instance, a custom object detection model could accurately identify and locate potential instances of plant disease, allowing farmers to detect threats to their crop yields that would otherwise not be discernible to the naked human eye. And in health care, object detection could be used to help treat conditions that have specific and unique symptomatic lesions. One such example of this comes in the form of skincare and the treatment of acne — an object detection model could locate and identify instances of acne in seconds.

Self-driving cars

Real-time car detection models are key to the success of autonomous vehicle systems. These systems need to be able to identify, locate, and track objects around them in order to move through the world safely and efficiently. And while tasks like image segmentation can be (and often are) applied to autonomous vehicles, object detection remains a foundational task that underpins current work on making self-driving cars a reality.

Object detection

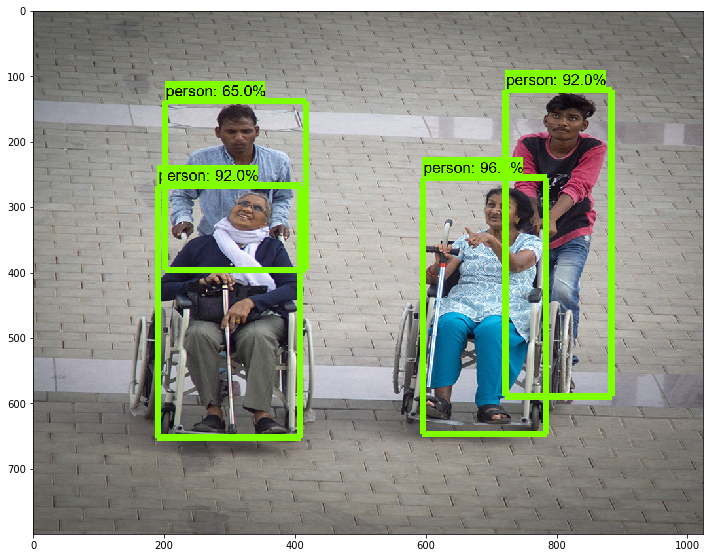

MobileNet can also be deployed as an effective base network in modern object detection systems. I have used a pre-trained SSD mobilenet model on the coco dataset for object detection. SSD is evaluated with 300 input resolution (SSD 300) and using mobilenet as a base network.

From mobilenet architecture, all the features are predicted from depthwise convolution, and those vectors are passed on to the detection algorithm.

From SSD architecture all the bounding boxes are detected with their locations including a background as 1 class. The formula for prediction is

Df x Df x M ((no of objects +1)+4)).

After all the features are detected and their respective bounding box is created Non-maximum suppression is done so that if there are any objects detected in the overlapping regions it will generate a threshold and discard the ones not requires and only most likely predictions are retained.

From there all the outputs are generated. I took the same algorithm and applied it to the webcam feed to detect objects. I used the OpenCV library to access the webcam and save the video file in .avi format.

Conclusion

I used SSD to speed up the process by eliminating the region proposal network. By this, there is a drop in accuracy so I combine the MobileNet and SSD to get better accuracy.

AI/ML

Trending AI/ML Article Identified & Digested via Granola by Ramsey Elbasheer; a Machine-Driven RSS Bot

via WordPress https://ramseyelbasheer.io/2021/04/29/object-detection-on-your-images-using-ssd-mobilenet/