Getting To Know Your Data — Part 2 (Seeing Data Through the “Data Iris”)


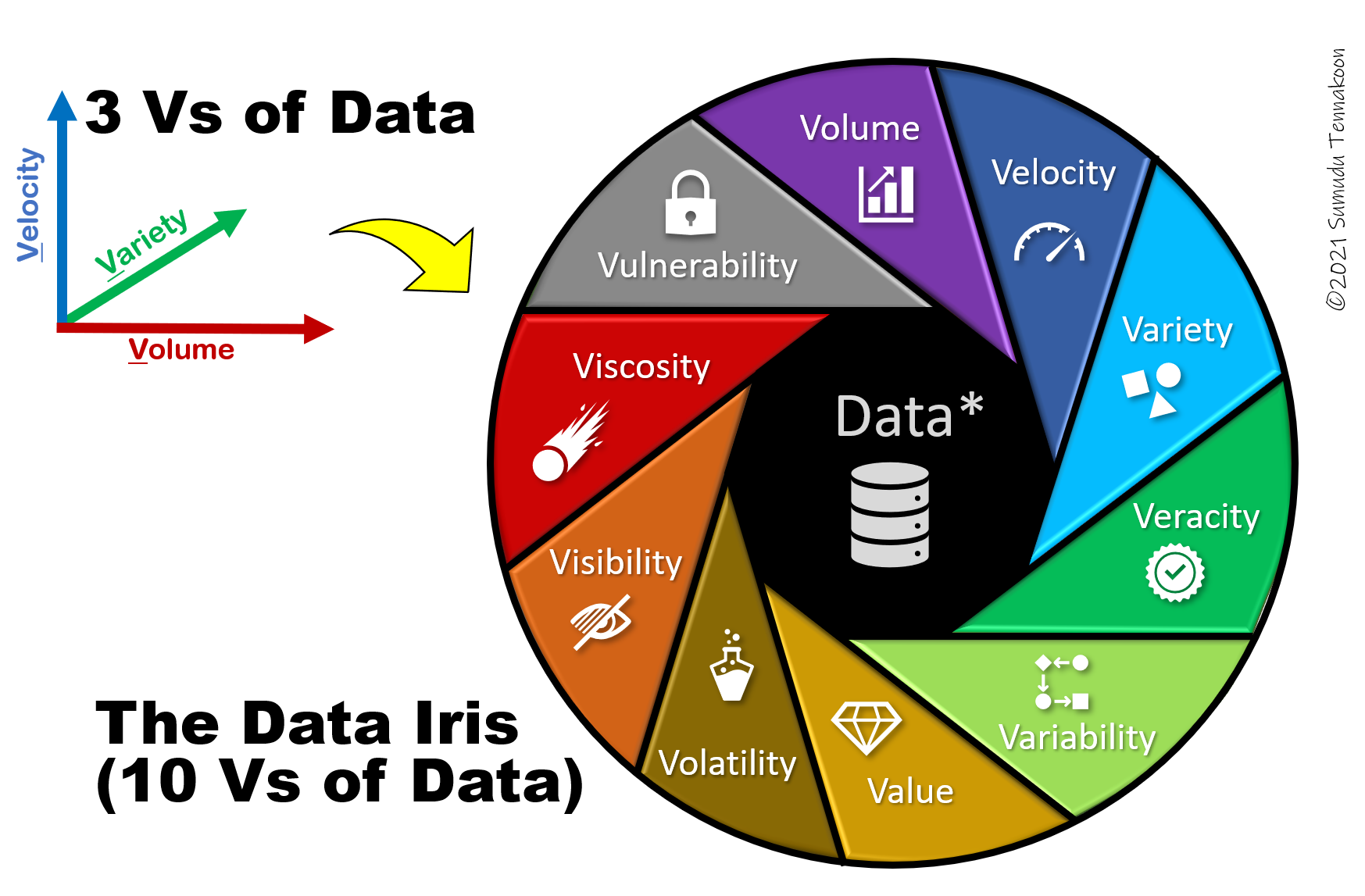

The Data Iris (Beyond the 3Vs of Data)

Since the 3Vs established themselves as a metric in the data science/big data community, several organizations and authors have extended them by adding more perspectives [1–6]. As a result, the 4 Vs of data (adding Veracity), the 5 Vs of data (adding Value), and the 8 Vs of data (adding Visualization, Variability, and Volatility) emerged in the literature. Some authors extended the list further, to 10 Vs of big data.

After reviewing previous publications, consolidating some terms, and adding a new perspective on security and compliance, we can construct a metric of 10 Vs that describes the qualities of data and assemble it into the concept of the “Data Iris”. The word iris is chosen deliberately: it is borrowed from human anatomy, where the iris of the eye controls the amount of light reaching the retina.

When we look at our data through this Data Iris and intuitively adjust its components, we should see our data better than ever. Let’s continue exploring the remaining Vs that make up the Data Iris.

4. Veracity

Even if we handle the challenges of data volume and velocity sufficiently, we still need to make sure the data is trustworthy, accurate, and free of ambiguity, and that its quality is maintained throughout collection and storage. A data-driven organization needs to invest heavily in, and apply adequate strategies for, maintaining the veracity of its data to get the best use out of it. Consuming untrustworthy, inaccurate, or poorly sourced data reduces veracity, which can depreciate the value of the products built on it and make it harder to keep up with compliance and regulations.
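To make this more concrete, here is a minimal Python sketch of automated trust checks that could run before data is stored: it flags missing values, implausible values, and ambiguous duplicates. The `Reading` record, its fields, and the valid temperature range are hypothetical assumptions for illustration; real checks would come from an organization's own data-quality rules.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical sensor record; field names are illustrative assumptions.
@dataclass(frozen=True)
class Reading:
    sensor_id: str
    timestamp: str
    celsius: Optional[float]

VALID_RANGE = (-50.0, 60.0)  # assumed plausible range for this example

def veracity_issues(readings: list) -> dict:
    """Return the indices of records that fail simple trust checks."""
    issues = {"missing": [], "out_of_range": [], "duplicate": []}
    seen = set()
    for i, r in enumerate(readings):
        key = (r.sensor_id, r.timestamp)
        if key in seen:
            # Two readings for the same sensor and time are ambiguous.
            issues["duplicate"].append(i)
        seen.add(key)
        if r.celsius is None:
            issues["missing"].append(i)
        elif not (VALID_RANGE[0] <= r.celsius <= VALID_RANGE[1]):
            issues["out_of_range"].append(i)
    return issues

readings = [
    Reading("s1", "2021-04-20T10:00", 21.5),
    Reading("s1", "2021-04-20T10:00", 21.7),  # conflicting duplicate
    Reading("s2", "2021-04-20T10:00", None),  # missing value
    Reading("s3", "2021-04-20T10:00", 999.0),  # implausible value
]
print(veracity_issues(readings))
# → {'missing': [2], 'out_of_range': [3], 'duplicate': [1]}
```

Flagging records rather than silently dropping them keeps the decision (fix, quarantine, or discard) with the people accountable for the data.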

5. Variability

The characteristics of data can vary throughout its use or transmission. The rate at which data accumulates or is transmitted can vary with circumstances. The form of the data can change, the nature of its sources can change, and the data itself can be transformed into different formats within the organization. Data practitioners in an organization need to keep up with these changes and address them accordingly to avoid affecting applications, products, or business operations.
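One way to keep up with changes in form is to compare each incoming record against the schema downstream systems expect, so that a renamed field or a changed type is caught early. The sketch below assumes a hypothetical `EXPECTED_SCHEMA` of field names and Python types; it is an illustration, not a substitute for a proper schema-validation tool.

```python
# Hypothetical expected schema: field name -> Python type.
EXPECTED_SCHEMA = {"order_id": int, "amount": float, "currency": str}

def schema_drift(record: dict) -> dict:
    """Report how one incoming record deviates from the expected schema."""
    missing = sorted(k for k in EXPECTED_SCHEMA if k not in record)
    unexpected = sorted(k for k in record if k not in EXPECTED_SCHEMA)
    type_changed = sorted(
        k for k, expected_type in EXPECTED_SCHEMA.items()
        if k in record and not isinstance(record[k], expected_type)
    )
    return {"missing": missing, "unexpected": unexpected, "type_changed": type_changed}

# A source that started sending string IDs and renamed "currency".
print(schema_drift({"order_id": "A-17", "amount": 12.5, "currency_code": "EUR"}))
# → {'missing': ['currency'], 'unexpected': ['currency_code'], 'type_changed': ['order_id']}
```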

6. Value

Data has become an important asset to both individuals and organizations (governments, industry, educational and research institutes, manufacturers, etc.). Some people say we are in a new gold rush era in which data has replaced gold.

Like unprocessed gold taken out of a mine, data has little value in its raw form but has great potential to add value depending on how it is managed, processed, analyzed, presented, and preserved by the miners (in this case, data professionals).

When gold is purified and made into jewelry, the end product is worth significantly more than the raw gold. The same happens when data is properly used to derive solutions to scientific, health, social, and business problems (for example, by generating insights, descriptive analytics, predictive analytics, or machine learning). Data analytics solutions embedded in an application or product amplify its value, quality, and usefulness.

The value of data goes up with accuracy, completeness, and accessibility. It goes down if the data is not delivered in a timely manner, is presented out of context, or is commonly available.

As an example, think of a list of people’s names in an Excel file. Is it valuable data? Maybe not without proper context (e.g., a list of people attending an event). Now add another column (data point) with phone numbers. The value of the data increases significantly, provided that the name-to-phone-number assignments are accurate (i.e., they are real phone numbers we can use to reach those individuals) and complete (we have phone numbers for a significant portion of the records). The value rises further as more data points (e.g., email address, age, hometown, profession) are added.
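Completeness, one of the value drivers in this example, is easy to measure per column. Below is a minimal sketch using a hypothetical contact list, where `None` marks a value we could not find. Note that it says nothing about accuracy: a filled-in phone number can still be wrong, which has to be verified separately.

```python
# Hypothetical contact list; None marks a value we could not find.
contacts = [
    {"name": "Alice", "phone": "555-0101", "email": None},
    {"name": "Bob", "phone": None, "email": "bob@example.com"},
    {"name": "Chen", "phone": "555-0103", "email": "chen@example.com"},
    {"name": "Dana", "phone": "555-0104", "email": None},
]

def completeness(rows: list, column: str) -> float:
    """Fraction of rows that have a non-empty value in `column`."""
    if not rows:
        return 0.0
    filled = sum(1 for row in rows if row.get(column) not in (None, ""))
    return filled / len(rows)

for col in ("name", "phone", "email"):
    print(f"{col}: {completeness(contacts, col):.0%}")
# → name: 100%
# → phone: 75%
# → email: 50%
```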

7. Volatility

Like almost everything in the universe, data has its own lifetime. It could be the time until its storage loses power or until the data is wiped from storage. However, data also has a lifetime based on its validity.

Depending on the application, this lifetime can be defined by policies, laws, or regulations. Sometimes the value of data depreciates significantly once it is no longer relevant or useful. Some data science professionals may argue that data never becomes irrelevant in general, since it can be valuable for future applications that require historical data.

Data volatility drives organizational decisions such as how much to invest in preserving the data (storage, backup, etc.) and when to dispose of it.
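Such decisions are often encoded as a retention policy. The sketch below assumes a hypothetical policy with per-category retention periods and a fixed "archive after" threshold; the numbers are illustrative only and are not taken from any law or regulation.

```python
from datetime import date, timedelta

# Hypothetical retention periods per data category (illustrative values).
RETENTION = {
    "web_logs": timedelta(days=90),
    "invoices": timedelta(days=365 * 7),
}
ARCHIVE_AFTER = timedelta(days=30)  # assumed: move to cheaper storage after 30 days

def retention_action(category: str, created: date, today: date) -> str:
    """Decide whether a record stays in primary storage, is archived, or is deleted."""
    age = today - created
    if age > RETENTION[category]:
        return "delete"
    if age > ARCHIVE_AFTER:
        return "archive"
    return "keep"

print(retention_action("web_logs", date(2021, 1, 1), date(2021, 4, 20)))  # → delete
print(retention_action("invoices", date(2021, 1, 1), date(2021, 4, 20)))  # → archive
```

Keeping the policy in data (the `RETENTION` table) rather than scattering dates through code makes it easier to update when the governing rules change.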

8. Viscosity

When data feeds into a system at a high rate (velocity) and the data sources and formats differ from one another (variety), internal friction can develop from the resistance created during data integration. This resistance can be amplified when different tools and technologies are used within the same organization to store and transmit data, such as a local database server vs. cloud hosting. This mix of data sources, formats, tools, and technologies can be seen in data projects of any scale. Viscosity can also be amplified by human factors such as decision-making and the availability of expertise.
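A common way to reduce this friction is to write one small adapter per source that converts its records into a single shared shape before any further processing. The sketch below assumes two hypothetical sources: a CSV export from a local database with naive timestamps (assumed to be UTC), and a JSON payload from a cloud API with epoch-millisecond timestamps and numbers encoded as strings.

```python
import csv
import io
import json
from datetime import datetime, timezone

# Two hypothetical sources describing the same kind of event in different formats.
LOCAL_DB_EXPORT = "id,ts,value\n1,2021-04-20 10:00:00,3.5\n"
CLOUD_API_PAYLOAD = '[{"eventId": "2", "epochMillis": 1618912800000, "reading": "4.0"}]'

def from_local_csv(text: str) -> list:
    # Local export: naive timestamps, assumed here to be in UTC.
    return [
        {
            "id": row["id"],
            "time": datetime.strptime(row["ts"], "%Y-%m-%d %H:%M:%S").replace(tzinfo=timezone.utc),
            "value": float(row["value"]),
        }
        for row in csv.DictReader(io.StringIO(text))
    ]

def from_cloud_json(text: str) -> list:
    # Cloud payload: epoch milliseconds, numbers sent as strings.
    return [
        {
            "id": item["eventId"],
            "time": datetime.fromtimestamp(item["epochMillis"] / 1000, tz=timezone.utc),
            "value": float(item["reading"]),
        }
        for item in json.loads(text)
    ]

# Downstream code only ever sees the common {"id", "time", "value"} shape.
records = from_local_csv(LOCAL_DB_EXPORT) + from_cloud_json(CLOUD_API_PAYLOAD)
print(records[0]["time"] == records[1]["time"])  # → True
```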

When data is used for real-time analysis or applications, such as creating summaries, visualizations, or dashboards from streaming data, viscosity plays a greater role. It therefore needs to be mitigated with proper methods and tools for data loading/retrieval and processing.
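For streaming summaries, one such method is to update statistics incrementally as each event arrives instead of reloading the full history for every dashboard refresh. Below is a minimal sketch of a running count, mean, minimum, and maximum; the `RunningSummary` class is a hypothetical illustration, not part of any particular streaming framework.

```python
from dataclasses import dataclass

@dataclass
class RunningSummary:
    """Incrementally updated count/mean/min/max for a streaming metric."""
    count: int = 0
    mean: float = 0.0
    minimum: float = float("inf")
    maximum: float = float("-inf")

    def update(self, x: float) -> None:
        # Constant work per event: the history never has to be re-read.
        self.count += 1
        self.mean += (x - self.mean) / self.count
        self.minimum = min(self.minimum, x)
        self.maximum = max(self.maximum, x)

summary = RunningSummary()
for value in [3.5, 4.0, 2.5, 6.0]:  # stand-in for events arriving from a stream
    summary.update(value)
print(summary.count, summary.minimum, summary.maximum)  # → 4 2.5 6.0
```

The mean update `mean += (x - mean) / count` gives the same result as summing and dividing, without keeping a growing list of past values.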

9. Visibility

One critical challenge of digital transformation is protecting sensitive data from adversaries who intend to misuse it for malicious purposes or to harm the entities associated with the data assets. For everyone participating in a digital data platform to feel comfortable, we need to establish standards, policies, and regulations on the accessibility and visibility of data assets. As a result, restrictions can be imposed on who can access particular data assets and from which locations or networks they can be accessed.
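Restrictions on who may access an asset and from where can be expressed as a simple policy check. This sketch assumes a hypothetical `POLICY` table that maps each asset to allowed roles and allowed networks, and it uses Python's standard `ipaddress` module to test network membership. Assets without a policy are denied by default.

```python
import ipaddress

# Hypothetical policy: which roles may read each asset, and from which networks.
POLICY = {
    "patient_records": {
        "roles": {"clinician", "auditor"},
        "networks": [ipaddress.ip_network("10.20.0.0/16")],  # internal network only
    },
    "public_reports": {
        "roles": {"clinician", "auditor", "analyst"},
        "networks": [ipaddress.ip_network("0.0.0.0/0")],  # any IPv4 address
    },
}

def can_access(asset: str, role: str, client_ip: str) -> bool:
    """Allow access only if both the role and the client network are permitted."""
    rule = POLICY.get(asset)
    if rule is None:
        return False  # deny by default for assets without a policy
    addr = ipaddress.ip_address(client_ip)
    return role in rule["roles"] and any(addr in net for net in rule["networks"])

print(can_access("patient_records", "clinician", "10.20.5.7"))    # → True
print(can_access("patient_records", "clinician", "203.0.113.9"))  # → False
```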

Visibility is directly related to privacy and data protection. For example, when we work with a data asset, we need to know our limits, restrictions, and boundaries so that we use the available data responsibly rather than exploiting it for short-sighted gain.

Important questions to answer concerning data visibility include: What parts of the data can we use in our work? What parts can we share in our work products? Which methods (e.g., aggregating, anonymizing, masking, excluding) and tools can we use to carry out data projects in compliance with data privacy policies, laws, and regulations (e.g., GDPR [15], CCPA [16])?
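Several of the methods listed above can be sketched in a few lines of Python. The example below excludes a field, masks email addresses, replaces user IDs with keyed-hash pseudonyms (using the standard `hmac` and `hashlib` modules), and aggregates counts while suppressing small groups. The field names, the key, and the minimum group size are hypothetical assumptions. Keyed hashing is pseudonymization, which is weaker than true anonymization, so a sketch like this does not by itself establish compliance with any regulation.

```python
import hashlib
import hmac
from collections import Counter

# Hypothetical secret, stored outside the shared dataset.
PSEUDONYM_KEY = b"replace-with-a-secret-from-a-vault"

def mask_email(email: str) -> str:
    """Keep the first character and the domain; hide the rest of the local part."""
    local, _, domain = email.partition("@")
    return f"{local[:1]}***@{domain}"

def pseudonymize(value: str) -> str:
    """Keyed hash: stable tokens that cannot be recomputed without the key."""
    return hmac.new(PSEUDONYM_KEY, value.encode(), hashlib.sha256).hexdigest()[:16]

def prepare_for_sharing(rows: list) -> list:
    # Exclude fields the work product does not need (here, "phone").
    return [
        {"user": pseudonymize(r["user_id"]), "email": mask_email(r["email"]), "city": r["city"]}
        for r in rows
    ]

def aggregate_by_city(rows: list, min_group_size: int = 2) -> dict:
    # Share counts only, and suppress groups small enough to single someone out.
    counts = Counter(r["city"] for r in rows)
    return {city: n for city, n in counts.items() if n >= min_group_size}

rows = [
    {"user_id": "u1", "email": "alice@example.com", "phone": "555-0101", "city": "Springfield"},
    {"user_id": "u2", "email": "bob@example.com", "phone": "555-0102", "city": "Springfield"},
    {"user_id": "u3", "email": "chen@example.org", "phone": "555-0103", "city": "Riverside"},
]
print(prepare_for_sharing(rows)[0]["email"])  # → a***@example.com
print(aggregate_by_city(rows))  # → {'Springfield': 2}
```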

10. Vulnerability

We previously discussed how data is tightly bound to our lives and livelihoods, and how that bond grows stronger over time. We also looked at the analogy that “data is the new gold.” When data or data-oriented systems are handled irresponsibly or left without proper protection, they can bring serious harm to individuals, communities, and nations.

Recent data breaches and various other attacks on organizational data infrastructures remind us of the importance of protecting our data-driven applications, storage platforms, and communication networks from physical and virtual attacks by adversaries. Therefore, in all data-related work, we should be cautious and proactive about the vulnerabilities these systems possess.

Properly assessing vulnerabilities and addressing them in the early stages of a data project is a crucial task regardless of the project’s scale or domain. However, real-world data projects face key challenges in addressing vulnerabilities.
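An early-stage assessment can start with something as simple as an automated review of deployment settings. The sketch below assumes a hypothetical nested configuration and a hand-written list of rules; the keys and rules are illustrative, not drawn from any particular platform. Settings that cannot be found are reported as well, since an unknown state should not be assumed safe.

```python
# Hypothetical deployment settings for a data pipeline; keys are illustrative.
CONFIG = {
    "storage": {"public_read": True, "encryption_at_rest": False},
    "transport": {"tls": True},
    "database": {"default_password_changed": False},
}

# Each rule: (path into the config, value considered unsafe, finding message).
RULES = [
    (("storage", "public_read"), True, "Storage is publicly readable"),
    (("storage", "encryption_at_rest"), False, "Data at rest is not encrypted"),
    (("transport", "tls"), False, "Data in transit is not encrypted"),
    (("database", "default_password_changed"), False, "Database still uses a default password"),
]

_MISSING = object()  # sentinel for settings that are not present

def audit(config: dict) -> list:
    """Return one finding per unsafe or unverifiable setting."""
    findings = []
    for path, unsafe_value, message in RULES:
        node = config
        for key in path:
            node = node.get(key, _MISSING) if isinstance(node, dict) else _MISSING
        if node is _MISSING:
            findings.append(f"Cannot verify: {'.'.join(path)} is not set")
        elif node == unsafe_value:
            findings.append(message)
    return findings

for finding in audit(CONFIG):
    print(finding)
```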

A common scenario is that organizations face high demand to deliver products quickly to meet market needs, while allocating less attention and fewer resources (money and time) to addressing vulnerabilities. Another constraint is a lack of expertise within the organization. When decision-makers and executives are uninformed or shortsighted about vulnerability issues, they can lead their organizations down a destructive path.


Trending AI/ML Article Identified & Digested via Granola by Ramsey Elbasheer; a Machine-Driven RSS Bot

via WordPress https://ramseyelbasheer.io/2021/04/20/getting-to-know-your-data%e2%80%8a-%e2%80%8apart-2-seeing-data-through-the-data-iris/