Tokenization and numericalization are the different things to solve the same problem?

Original Source Here

Tokenization and numericalization are the different things to solve the same problem?

Introduction

Preprocessing is one of the most important tasks that have to done to be able to train the model. Training the model as the process really depends on hardware only as long as you selected a well-optimized transfer learning model while preprocessing along with dataset prep counts for 90% of the work of every Machine Learning engineer.

In this article, we are going to learn about two key preprocessing operations in natural language processing Tokenization and Numericalization with a major focus on the former one as this has to deal with how we handle semantic units like words, phrases, and sentences.

Disclaimer: The article is prepared with a close relation to the content taught in the fast.ai course, particularly in:

Let’s layout a high overview of the Language Model

Most Natural Language Processing problems can be solved through transfer learning, where you start with a general language model and then fine-tuning it a specific text corpus followed by adapting it for even more specific classification problem like sentiment analysis.

A language model is a model that has been trained to guess what the next word in a text is (having read the ones before). To properly guess the next word in a sentence is not a trivial task as the model has to develop an understanding of the language itself.

PS. Before the transfer learning for NLP was introduced, the language models were based on n-gram. The drawback of using this approach, of representing text as tokens is that, essentially, it cannot understand English. The structure of the sentence is not really taken into consideration, only the frequency of the words is used. It does not know the difference between “I want to eat a hot __” and “It was a hot ___”.

Even if our language model knows quite a lot about the language we are using for our task, adapting it to the specific corpus is very crucial because the specific corpus will have its own language style like formal or informal as well as very specific terms that you won’t meet outside the subject domain. Doing transfer learning and fine-tuning to specific corpus results in significantly better prediction, and is called Universal Language Model Fine-tuning (ULMFit) approach (Fig 1.)

Getting hotter with text preprocessing

To build a language model is essentially to answer “how to predict the next word of a sentence using a neural network?”

Putting simply, we combine the text corpus into one big long string and split it into words, giving us a very long list of words. Our independent variable will be the sequence of words starting with the first word in our very long list and ending with the second to last, and our dependent variable will be the sequence of words starting with the second word and ending with the last word.

Our vocab will consist of a mix of common words that are already in the vocabulary of our pretrained model and new words specific to our corpus. Our embedding matrix (the parameters that need to be optimized for the model to be satisfied to the certain metric criteria) will be built accordingly: for words that are in the vocabulary of our pretrained model, we will take the corresponding row in the embedding matrix of the pretrained model; but for new words we won’t have anything, so we will just initialize the corresponding row with a random vector (so it these numbers can be optimized for the NLP task)

“Converting the text into a list of words” — Tokenization

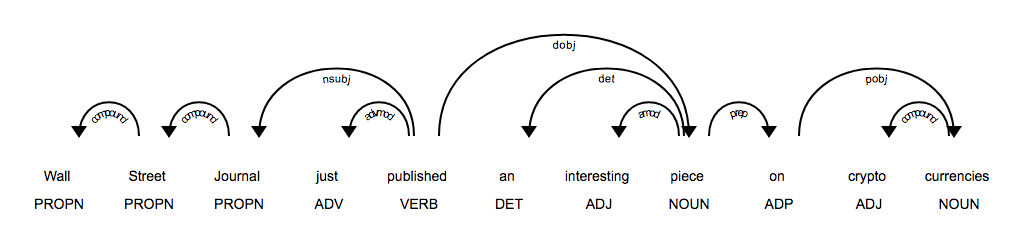

In a sense, tokenization means we are translating the original English language sequence into a simplified tokenized language — a language that is designed to be easy for a model to learn.

But what do we do with punctuation or with the word like “don’t”, hyphenated or chemical words, what about Chinese characters? That creates its own question about what the word really is!

To answer these questions there are different approaches you could go with:

- word-based

- subword-based

- character-based

A token is one element of a list created by the tokenization process. It could be a word, part of a word (a subword), or a single character.

The default English word tokenizer is called spaCy. It has a sophisticated rules engine with special rules for URLs, individual special English words, and much more.

Here is a great article that solely written to guide you through spaCy use cases:

Some tokens can be normal words but some can take a weird form like xxbos, which can mean, depending on what tokenization algorithm you are using, the start of a new text (“BOS” is a standard NLP acronym that means “beginning of stream”. By recognizing this start token, the model will be able to learn it needs to “forget” what was said previously and focus on upcoming words.

Another particularly interesting concept to consider is capitalization. A capitalized word can be replaced with a special capitalization token, followed by the lowercase version of the words, saving compute and memory resources, but can still learn the concept of capitalization.

Many of similar to those concepts mentioned above (BOS, capitalization) are embodied in preprocessing rules that can be applied to texts before or after it’s tokenized. For instance, fast.ai provides these rules:

For the documentation explaining each of the preprocessing rules, refer to:

Subword tokenization

The approach of using subword tokenization derives from the assumption that spaces provide a useful separation of components of meaning in a sentence. For example, languages like Chinese or Korean don’t use spaces, and in fact, they don’t even have a well-defined concept of a “word”.

There are also languages, like Russian, that can add many subwords together without spaces, creating very long words that include a lot of separate pieces of information.

To handle these cases, it’s generally best to use subword tokenization. This proceeds in two steps:

- Analyze a corpus of documents to find the most commonly occurring groups of letters. These become the vocab.

- Tokenize the corpus using this vocab of subword units.

When using fastai’s subword tokenizer, the special character _ represents a space character in the original text.

Subword tokenization becomes increasingly popular because, it provides a way to easily scale between character tokenization(i.e., using a small subword vocab) and word tokenization (i.e., using a large subword vocab), and handles every human language without needing language-specific algorithms to be developed. It can handle other “languages” such as genomic sequences or MIDI music notation.

Numericalization

The next process after splitting into tokens is numericalization, which is converting tokens to numbers to pass them to the neural network.

The steps are:

- Make a list of all possible levels of the vocabulary

- Replace each level with its index in the vocab.

After having numbers, we can put them in batches for our model training.

This concludes a high-level discussion around text preprocessing.

If you liked this article please press the heart button and leave the comments below. See you next time and happy learning.

AI/ML

Trending AI/ML Article Identified & Digested via Granola by Ramsey Elbasheer; a Machine-Driven RSS Bot

via WordPress https://ramseyelbasheer.wordpress.com/2021/01/31/tokenization-and-numericalization-are-the-different-things-to-solve-the-same-problem/