3D Buildings from Imagery with AI


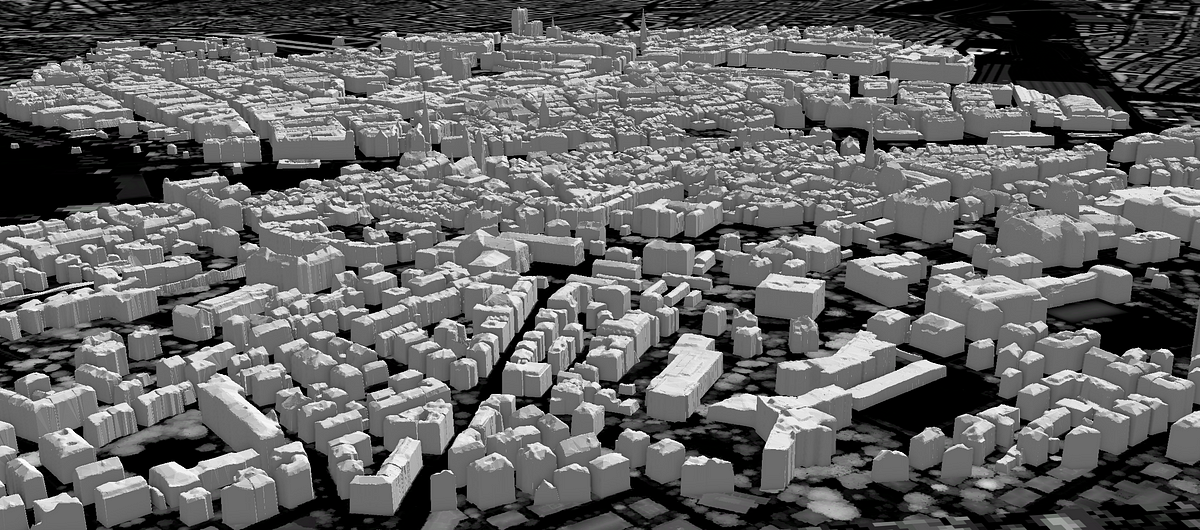

3D Buildings from Imagery with AI. Part 1: From Elevation Rasters

by Dmitry Kudinov, Camille Lechot, David Yu, Hakeem Frank

Recent advancements in artificial neural networks that focus on reconstructing 3D meshes from input 2D images show great potential and significant practical value in a multitude of GIS applications.

This series of posts describes our experiments with one such neural network architecture that we applied to reconstruct 3D building shells from various types of remotely sensed data.

This post, the first of the series, describes extracting buildings from elevation rasters, specifically, normalized digital surface model rasters.

Introduction

Modern municipal governments and national mapping agencies are evolving their traditional 2D geographic datasets into interactive, realistic-looking 3D digital twins to get better results from planning and analysis projects. For example, local governments responsible for urban design, public events planning, safety, pollution monitoring, solar radiation potential assessment, etc. increasingly rely on this kind of data. Cost-efficient and prompt acquisition of accurate 3D buildings is vital to their success. In recent years, significant progress has been made in automating 3D building reconstruction workflows, yet the amount of manual labor spent on cleanup and quality control remains high, which slows adoption of the Digital Twin concept.

Can deep learning help increase the quality of reconstructions while reducing the labor cost? Let us see…

(…but if you are short on time and want to go straight to results, here is an interactive reconstruction)

Input: Normalized digital surface model rasters

A normalized digital surface model raster (nDSM) can be created by aggregating the heights of points in a lidar point cloud (usually collected by an aircraft or a drone) into fixed-size square bins/pixels, for example at a resolution of 0.5m per pixel. The result is a georeferenced single-band raster whose "color" represents height; in the case of a normalized raster, height above the local ground. To learn more about creating surface model rasters, see this imagery workflow and conversion tool.
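The binning step described above can be sketched in plain Python. This is a minimal illustration, not the referenced conversion tool: it assumes the points already carry heights above ground (ground elevation subtracted) and that each pixel keeps the maximum height of the points falling into it. The function name `rasterize_ndsm` is hypothetical.

```python
import math


def rasterize_ndsm(points, cell_size=0.5):
    """Bin (x, y, height_above_ground) lidar points into a square grid.

    Assumptions (illustrative only): heights are already relative to the
    local ground, each cell keeps the MAXIMUM height of its points, and
    empty cells (or negative heights) end up as 0.0.
    Returns (grid, origin): grid[row][col] with row 0 at the northern edge,
    and origin = (min_x, max_y), the top-left corner of the raster.
    """
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    min_x, max_y = min(xs), max(ys)
    n_cols = int(math.floor((max(xs) - min_x) / cell_size)) + 1
    n_rows = int(math.floor((max_y - min(ys)) / cell_size)) + 1
    grid = [[0.0] * n_cols for _ in range(n_rows)]
    for x, y, h in points:
        col = int((x - min_x) // cell_size)
        row = int((max_y - y) // cell_size)
        grid[row][col] = max(grid[row][col], h)
    return grid, (min_x, max_y)


# Four points on a 0.5 m grid produce a 2x2 raster.
grid, origin = rasterize_ndsm(
    [(0.0, 0.0, 3.0), (0.2, 0.1, 5.0), (0.9, 0.0, 2.0), (0.0, 0.9, 7.5)]
)
print(grid)  # [[7.5, 0.0], [5.0, 2.0]]
```

Taking the maximum per cell favors rooftops over lower returns in the same pixel; a mean or a percentile would be equally valid choices for a sketch like this.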

Floating-point rasters like those shown above already contain enough semantics for crude 2.5D extrusions (Fig. 2), but can we push the quality further with the help of artificial intelligence?
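A crude 2.5D extrusion of this kind can be sketched as follows. It is an illustration under simplifying assumptions, not the procedural extrusion code used in Bld3d: the footprint is assumed convex and listed counter-clockwise, a single roof height is used (one might take, e.g., the median nDSM value inside the footprint), walls are not subdivided, and no floor faces are generated. The function name `extrude_footprint` is hypothetical.

```python
def extrude_footprint(footprint, height):
    """Extrude a convex, counter-clockwise footprint into a flat-roofed prism.

    Returns (vertices, faces): vertices are (x, y, z) tuples, faces are
    vertex-index triangles with outward-facing normals. Ground vertices sit
    at z = 0 and no floor faces are created.
    """
    n = len(footprint)
    # Indices 0..n-1: ground ring at z = 0; indices n..2n-1: roof ring.
    vertices = [(float(x), float(y), 0.0) for x, y in footprint]
    vertices += [(float(x), float(y), float(height)) for x, y in footprint]
    faces = []
    for i in range(n):
        j = (i + 1) % n
        # Wall quad (ground i, ground j, roof j, roof i) as two triangles.
        faces.append((i, j, n + j))
        faces.append((i, n + j, n + i))
    # Convex roof: fan triangulation from the first roof vertex.
    for k in range(1, n - 1):
        faces.append((n, n + k, n + k + 1))
    return vertices, faces


vertices, faces = extrude_footprint([(0, 0), (10, 0), (10, 6), (0, 6)], 7.5)
print(len(vertices), len(faces))  # 8 10
```

A real footprint is often concave, in which case the fan triangulation above would produce overlapping roof triangles and a proper polygon triangulation (e.g., ear clipping) would be needed.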

Output: LOD2 Building Models

Level of Detail (LOD): given the nDSM rasters above, can we automatically and efficiently produce LOD2 building models [1]?

Or could we push the quality of extracted building models even further, say to LOD2.1–2.3 [2]?

LOD2.0 is a coarse model with standard roof structures, potentially including large building parts such as garages (larger than 4 m and 10 m²).

LOD2.1 is similar to LOD2.0 with the difference that it requires smaller building parts and extensions such as alcoves, large wall indentations, and external flues (larger than 2 m and 2 m²) to be acquired as well. In comparison to the coarser counterpart, modelling such features in this LOD could benefit use cases such as estimation of the energy demand because the wall area is mapped more accurately.

LOD2.2 follows the requirements of LOD2.0 and LOD2.1, with the addition of roof superstructures (larger than 2 m and 2 m²) to be acquired. This applies mostly to dormers, but also to other significantly sized roof structures such as very large chimney constructions. Because the roof is mapped in more detail, this LOD can be advantageous for the estimation of the insolation of roofs.

LOD2.3 requires explicitly modelled roof overhangs if they are longer than 0.2 m, therefore the roof edge and the footprints are always at their actual location, which has advantages for use cases that require the volume of the building.

[2],[10]

Prominent Neural Network Architectures

So, what could be the launching pad for exploring potential solutions here? About a year ago we started by evaluating recent publications and their associated implementations, the most prominent of which were Differentiable Rendering [3], Occupancy Networks [4], and Mesh R-CNN [5].

Differentiable Rendering-based approaches, actively popularized by both the NVIDIA and Facebook AI teams, use 3D rendering as part of the training loop. The loss is calculated from the similarity between the ground-truth image and a rendering of the mesh, which the neural network morphs, textures, and lights at every iteration. While novel and potentially weakly supervised, training setups like these require significant compute and rendering resources.

Occupancy Networks are expected to consume fewer compute resources at training time, but producing results once the network is trained is more computationally demanding. In inference mode, the model returns occupancy probabilities for a set of input points, which makes inferring an accurate object boundary an iterative process.

Mesh R-CNN, on the other hand, has a traditional convolutional backbone (ResNet50 [6] + FPN [7]) with three to four additional heads (for our story, the "Mesh" head, built with graph convolutions, is of special interest). Its training-time compute requirements are therefore relatively low compared to Differentiable Rendering-based solutions, which is great for running quick-turnaround experiments. At inference time, Mesh R-CNN is expected to be computationally closer to traditional convolutional architectures, and faster than Occupancy Networks. Inference time mattered to us as well, since we were looking for a practical solution that could potentially grow into a product or a service later.

Bld3d Neural Network

Given the considerations above, we decided to build our prototype on top of the PyTorch Mesh R-CNN implementation kindly shared by the Facebook AI team.

But there were a few important modifications we needed to make to the original code and architecture before we could start training:

- The original Mesh R-CNN code was designed to work with images taken by a perspective camera, whereas our input rasters were orthorectified. The transformations from world space to normalized device coordinates (NDC) to camera space and back therefore had to be replaced with orthographic camera projections.

- Each mesh refinement stage uses the hyperbolic tangent as an activation function when predicting vertex offsets. Because Mesh R-CNN calculates losses in world space, this sometimes caused numeric stability issues when predicting offsets for large objects like buildings. To address this, we modified the loss calculation logic to work in NDC space, which is confined to the [-1, +1] cube.

- Mesh R-CNN uses a voxel head to predict a coarse set of occupied voxels from the FPN outputs. These voxels are then converted into a triangular mesh, which the chain of mesh-head refinement stages morphs into the final shape (vertex connectivity stays unchanged; only vertex positions are modified).

Since we were working with nDSM rasters as input, we replaced the trainable voxel head with procedural extrusion code, which produced initial meshes as shown in Fig. 2. This sped up training experiments and drastically reduced memory requirements (the voxel head uses cross-entropy to predict occupied voxels in 3D space, resulting in O(n³) memory usage).

- We streamlined the code further by removing the mask head. The mask head of Mesh R-CNN plays the same role as in its ancestor, the Mask R-CNN [8] architecture: it returns a per-instance pixel occupancy mask, i.e. the pixels occupied by an object in the given image.

For our use case, we already had building footprints. These days there are multiple ways of getting accurate building polygons from raw data: pretrained deep learning models, statistical methods working off raw point clouds, footprint regularization methods, etc. Again, this led to a significant speedup in training times.

- The original Mesh R-CNN output is always a watertight mesh with an arbitrary height above the "ground". For our building extraction experiments, we modified the refinement stage code to always keep the initial ground vertices at zero height, allowing them to move only in the horizontal plane. Also, to reduce the output size, our resulting meshes do not have "floor" faces.

- In our experiments with 0.5m nDSM rasters, we found that initial meshes with 2 vertices per meter, and refinement stages with larger-than-original Mesh R-CNN settings, work better:

GRAPH_CONV_DIM = 128

POOLER_RESOLUTION = 32

- In some experiments we used up to four mesh refinement stages instead of the original three, training them sequentially and adding new stages gradually once the validation loss and mesh quality had stabilized. Compared to our two-stage models, four-stage Bld3d models showed somewhat better reconstruction results in geographic regions where architectural styles were similar to those of the training set, but were less stable in geographies where the style was significantly different.

- We modified the normal consistency loss to penalize deviations between neighboring face normals only when the angle between them is below a certain threshold (15 degrees), which reduced the noise on reconstructed planar surfaces.

- We used the MSE of relative ground perimeter lengths as an additional component of the compound weighted loss.
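The orthographic replacement for the perspective camera and the move to NDC-space losses can be illustrated with a small sketch. It assumes one uniform scale per building chip (so proportions are preserved) and a hypothetical `half_extent` parameter equal to half of the chip's largest side in meters; these names are illustrative and not taken from the Mesh R-CNN code. The `apply_offsets` helper mimics a refinement stage adding tanh-bounded offsets. One plausible reading of the stability issue: each step is limited to less than one unit, which spans half of the NDC cube but less than 1 m in world coordinates, so large world-space moves push tanh into saturation.

```python
import math


def world_to_ndc(vertices, center, half_extent):
    """Orthographic world -> NDC mapping: shift and uniform scale, no perspective divide.

    center: (cx, cy, cz) in world units (meters); half_extent: half of the
    largest side of the mapped region, so the region lands in [-1, +1]^3.
    """
    cx, cy, cz = center
    return [((x - cx) / half_extent, (y - cy) / half_extent, (z - cz) / half_extent)
            for x, y, z in vertices]


def ndc_to_world(vertices, center, half_extent):
    """Inverse of world_to_ndc."""
    cx, cy, cz = center
    return [(x * half_extent + cx, y * half_extent + cy, z * half_extent + cz)
            for x, y, z in vertices]


def apply_offsets(vertices, raw_offsets):
    """Add tanh-bounded offsets, as a refinement stage would; each |offset| < 1."""
    return [tuple(v + math.tanh(o) for v, o in zip(vert, off))
            for vert, off in zip(vertices, raw_offsets)]


verts = [(100.0, 200.0, 0.0), (120.0, 210.0, 15.0)]
ndc = world_to_ndc(verts, center=(110.0, 205.0, 0.0), half_extent=20.0)
print(ndc)  # [(-0.5, -0.25, 0.0), (0.5, 0.25, 0.75)]
print(ndc_to_world(ndc, (110.0, 205.0, 0.0), 20.0) == verts)  # True
```

Using a center with cz = 0 keeps ground vertices at z = 0 in NDC as well, which fits the zero-ground constraint described above.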

Training Data

The second most (or maybe even the most) important aspect of deep learning is the data used to train the neural network.

For our experiments, we tapped into the Zurich Open Data portal, which offers multiple high-quality datasets collected around the same time, featuring Digital Terrain Model (DTM) and Digital Surface Model (DSM) rasters (0.5m resolution), visible spectrum satellite imagery (0.1m resolution), and, most importantly, about 48,000 manually crafted LOD2.3 building models. We selected the datasets from 2015.

Preparing the Data

To create training data, first we created an nDSM raster by subtracting a DTM from a DSM and zeroing all the pixels with a height value below 2.0m.
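On a small in-memory grid, the nDSM step amounts to the following minimal sketch. Plain Python lists stand in for rasters, both inputs are assumed to be co-registered on the same 0.5m grid, and `make_ndsm` is a hypothetical name; in practice this would be done with raster tools on the full georeferenced datasets.

```python
def make_ndsm(dsm, dtm, min_height=2.0):
    """Return DSM - DTM with every pixel lower than min_height (m) set to 0.0."""
    ndsm = []
    for dsm_row, dtm_row in zip(dsm, dtm):
        row = []
        for surface, terrain in zip(dsm_row, dtm_row):
            height = surface - terrain  # height above local ground
            row.append(height if height >= min_height else 0.0)
        ndsm.append(row)
    return ndsm


dsm = [[410.0, 415.5], [409.0, 420.0]]
dtm = [[409.0, 409.5], [409.0, 408.0]]
print(make_ndsm(dsm, dtm))  # [[0.0, 6.0], [0.0, 12.0]]
```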

Next, using a simple Python wrapper around the Export Training Data For Deep Learning tool in Esri's ArcGIS Pro, we exported georeferenced nDSM chips, each containing an individual building in the center, with all the pixels beyond that building's boundaries zeroed out. In addition to the nDSM chips, we exported mask rasters of the same resolution: each mask chip is a Boolean raster in which 0 represents ground and 1 represents a building. The original building mask geometries were calculated with the Multipatch Footprint tool, which derives building footprint polygons from the original 3D building shells.

The exported Boolean masks helped speed up data augmentation at training time and allowed for better-quality extrusions of initial meshes, specifically when a building's nDSM pixels were below the 2m threshold due to data quality issues, e.g., excessively large DTM raster values.

With the help of CityEngine, we exported individual buildings as OBJ files along with the metadata needed to link the exported OBJs with their nDSM image-chip counterparts.

The Training/Test split was done manually by selecting representative architectural styles and environments (urban and rural), ending up with 1,031 buildings in the Test set and 45,057 in the Training set. About 2,000 buildings were removed from the original pool because either the underlying nDSM chip had an average height below 2m or the building footprint area was less than 30m².
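The two exclusion rules can be expressed as a small filter. This sketch assumes the "average height" is taken over the pixels inside the building's Boolean mask (so zeroed below-threshold pixels pull the average down) and computes the footprint area from polygon vertices with the shoelace formula; `keep_building`, `polygon_area`, and their arguments are hypothetical.

```python
def polygon_area(ring):
    """Shoelace formula; ring is a list of (x, y) vertices in meters, not closed."""
    s = 0.0
    for (x1, y1), (x2, y2) in zip(ring, ring[1:] + ring[:1]):
        s += x1 * y2 - x2 * y1
    return abs(s) / 2.0


def keep_building(ndsm_chip, mask_chip, footprint,
                  min_mean_height=2.0, min_area=30.0):
    """False for buildings matching either exclusion rule described above."""
    inside = [h for h_row, m_row in zip(ndsm_chip, mask_chip)
              for h, m in zip(h_row, m_row) if m]
    mean_height = sum(inside) / len(inside) if inside else 0.0
    return mean_height >= min_mean_height and polygon_area(footprint) >= min_area


footprint = [(0.0, 0.0), (10.0, 0.0), (10.0, 5.0), (0.0, 5.0)]  # 50 m²
mask = [[1, 1], [1, 1]]
print(keep_building([[6.0, 6.0], [0.0, 0.0]], mask, footprint))  # True
print(keep_building([[3.0, 0.0], [0.0, 0.0]], mask, footprint))  # False
```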

Training Bld3d Neural Network

Hardware

We conducted our experiments on multi-GPU nodes, including a p3dn.24xlarge instance for up to 1,000 epochs in some of the runs.

Loss

The majority of the hyperparameter search focused on the weights of the mesh head's compound loss. We found that a weighted sum of Chamfer, Edge, Normal, modified Normal Consistency, and MSE Perimeter losses produces the highest-quality meshes.
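Two of the less standard loss terms can be sketched below, together with the weighted sum. These are illustrative interpretations, not the Bld3d code. `thresholded_normal_consistency` averages (1 - cos) over interior edges but only counts face pairs whose angle is below the threshold, assumed here to be 15 degrees, so sharp roof ridges and wall/roof edges are not smoothed away. `perimeter_mse` assumes "relative" means the perimeter error divided by the ground-truth perimeter. Chamfer, Edge, and Normal terms are taken as precomputed numbers, and all function names are hypothetical.

```python
import math


def face_normal(a, b, c):
    """Unit normal of triangle (a, b, c) via the cross product of two edges."""
    u = [b[i] - a[i] for i in range(3)]
    v = [c[i] - a[i] for i in range(3)]
    n = (u[1] * v[2] - u[2] * v[1], u[2] * v[0] - u[0] * v[2], u[0] * v[1] - u[1] * v[0])
    length = math.sqrt(sum(k * k for k in n)) or 1.0  # guard degenerate faces
    return tuple(k / length for k in n)


def thresholded_normal_consistency(vertices, faces, max_angle_deg=15.0):
    """Mean (1 - cos) over interior edges; pairs bent more than max_angle_deg add 0."""
    normals = [face_normal(*(vertices[i] for i in f)) for f in faces]
    edge_faces = {}
    for fi, f in enumerate(faces):
        for k in range(3):
            edge = tuple(sorted((f[k], f[(k + 1) % 3])))
            edge_faces.setdefault(edge, []).append(fi)
    cos_limit = math.cos(math.radians(max_angle_deg))
    interior, total = 0, 0.0
    for adjacent in edge_faces.values():
        if len(adjacent) != 2:
            continue  # boundary (or non-manifold) edge
        interior += 1
        cos = sum(p * q for p, q in zip(normals[adjacent[0]], normals[adjacent[1]]))
        if cos > cos_limit:  # nearly coplanar: treat the deviation as noise
            total += 1.0 - cos
    return total / interior if interior else 0.0


def perimeter(ring):
    """Length of a closed 2D ring given as a list of (x, y) vertices."""
    return sum(math.dist(p, q) for p, q in zip(ring, ring[1:] + ring[:1]))


def perimeter_mse(pred_rings, gt_rings):
    """Mean squared relative error of ground perimeters (assumed definition)."""
    errors = [((perimeter(p) - perimeter(g)) / perimeter(g)) ** 2
              for p, g in zip(pred_rings, gt_rings)]
    return sum(errors) / len(errors)


def compound_loss(terms, weights):
    """Weighted sum of named loss terms."""
    return sum(weights[name] * value for name, value in terms.items())


# Weights reported in the "LOD2.?" section of this post; the "perimeter"
# weight and all term values below are made-up placeholders.
weights = {"chamfer": 16.0, "normal": 0.1, "edge": 0.3,
           "normal_consistency": 0.1, "perimeter": 1.0}
terms = {"chamfer": 0.02, "normal": 0.3, "edge": 0.01,
         "normal_consistency": 0.05, "perimeter": 0.0025}
print(compound_loss(terms, weights))
```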

Augmentation

We extended Mesh R-CNN's original augmentation to include random rotations about the vertical axis, which significantly improved convergence and the F1-score on the Test set.
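A random rotation about the vertical axis can be sketched for the mesh side as shown below. This is an illustration, not the Mesh R-CNN or Bld3d augmentation code: the rotation center (e.g., the chip center in world coordinates) and the function names are assumptions, and the matching rotation of the input nDSM chip (for example with `scipy.ndimage.rotate`) is omitted. Only x and y change, so heights, including zero-height ground vertices, are preserved.

```python
import math
import random


def rotate_about_vertical(vertices, angle_rad, center=(0.0, 0.0)):
    """Rotate (x, y, z) vertices by angle_rad about a vertical axis through center."""
    cx, cy = center
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return [(cx + c * (x - cx) - s * (y - cy),
             cy + s * (x - cx) + c * (y - cy),
             z)  # heights are untouched
            for x, y, z in vertices]


def random_vertical_rotation(vertices, center=(0.0, 0.0), rng=random):
    """Apply a uniformly random rotation in [0, 2*pi); return vertices and angle."""
    angle = rng.uniform(0.0, 2.0 * math.pi)
    return rotate_about_vertical(vertices, angle, center), angle


rotated, angle = random_vertical_rotation([(3.0, 4.0, 2.0)], rng=random.Random(0))
x, y, z = rotated[0]
print(round(math.hypot(x, y), 6), z)  # 5.0 2.0
```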

Flex-batching

Input nDSM chips, at a fixed 0.5m resolution, varied in size from ~12 to almost 1,000 pixels along the longest side, depending on the size of the building. Relying on Detectron2's ImageList container, which pads all input images to the dimensions of the largest one in the minibatch, would therefore force a batch size small enough for the worst case and significantly reduce the average number of images per forward pass.

To address this and use the GPU more efficiently, we varied the minibatch size dynamically. Specifically, in collate_fn(), we controlled the payload size by tracking the largest image dimensions in the batch and the total size of the initial-mesh tensors. As soon as we reached an empirically determined memory limit, minibatch assembly stopped and the result was passed to the training loop.
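The batching logic can be sketched as a greedy generator. This is an illustration rather than the Bld3d code: instead of cutting batches inside `collate_fn()` as described above, it groups samples up front (for example, to feed a PyTorch batch sampler), and the sample fields (`height`, `width`, `num_verts`) and budget parameters are hypothetical stand-ins for the empirically determined memory limit. The padded-pixel estimate mirrors pad-to-largest batching: every image costs as much as the largest one in its batch.

```python
def flex_batches(samples, max_padded_pixels, max_mesh_verts):
    """Greedily group samples into variable-size minibatches.

    A batch is closed as soon as adding the next sample would push either
    the padded image payload (batch_size * max_h * max_w) or the total
    initial-mesh size over budget. A single oversized sample still gets a
    batch of its own.
    """
    batch, max_h, max_w, total_verts = [], 0, 0, 0
    for s in samples:
        new_h, new_w = max(max_h, s["height"]), max(max_w, s["width"])
        padded = (len(batch) + 1) * new_h * new_w
        over_budget = (padded > max_padded_pixels
                       or total_verts + s["num_verts"] > max_mesh_verts)
        if batch and over_budget:
            yield batch  # close the current minibatch
            batch, total_verts = [], 0
            new_h, new_w = s["height"], s["width"]
        batch.append(s)
        max_h, max_w = new_h, new_w
        total_verts += s["num_verts"]
    if batch:
        yield batch


samples = [
    {"height": 100, "width": 100, "num_verts": 1000},
    {"height": 50, "width": 50, "num_verts": 500},
    {"height": 400, "width": 300, "num_verts": 2000},
    {"height": 20, "width": 20, "num_verts": 100},
]
sizes = [len(b) for b in flex_batches(samples, max_padded_pixels=40_000,
                                      max_mesh_verts=10_000)]
print(sizes)  # [2, 1, 1]
```

Small chips that follow a large one still start a fresh, cheap batch, which is the point of varying the batch size instead of padding everything to the worst case.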

Mesh Quality Metrics and Tensorboard

We added Tensorboard hooks to keep track of the metrics below:

At training time for each refinement stage:

- Original (unweighted) Chamfer, Edge, Normal, Normal Consistency, MSE Perimeter losses.

- Total loss and its moving average.

- Some manually selected buildings from the Training set, representative of typical architectural styles and zoning, were captured inside the training loop and logged for interactive visualization in Tensorboard.

At validation time, we tracked the losses of the final refinement stage as well as additional quality metrics:

- Absolute and relative normal consistencies.

- Precision, recall, and F1-scores for point clouds (50,000 points) sampled from the GT and predicted meshes at various thresholds: 0.1m, 0.25m, 0.5m.

- Test meshes from the batch with the worst F1-score: both the metrics and an interactive visualization, as in Fig. 7.
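The point-cloud precision, recall, and F1-score can be sketched as below, following the usual definition: a predicted point counts as correct if a ground-truth point lies within the threshold, and a ground-truth point counts as recalled if a predicted point lies within the threshold. The brute-force nearest-neighbor search is a simplification for readability only; it is O(N·M) and far too slow for 50,000-point clouds, where a spatial index such as a k-d tree would be used. The function name `f1_at` is hypothetical.

```python
import math


def f1_at(pred_pts, gt_pts, threshold):
    """Return (precision, recall, f1) between two point clouds at a distance threshold."""
    def nearest(point, cloud):
        return min(math.dist(point, q) for q in cloud)

    precision = sum(nearest(p, gt_pts) <= threshold for p in pred_pts) / len(pred_pts)
    recall = sum(nearest(g, pred_pts) <= threshold for g in gt_pts) / len(gt_pts)
    if precision + recall == 0:
        return precision, recall, 0.0
    return precision, recall, 2 * precision * recall / (precision + recall)


gt = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.4, 0.0, 0.0), (3.0, 0.0, 0.0)]
pred = [(0.0, 0.0, 0.05), (1.0, 0.0, 0.05), (5.0, 0.0, 0.0)]
for t in (0.1, 0.25, 0.5):
    p, r, f1 = f1_at(pred, gt, t)
    print(f"F1@{t}m: precision={p:.3f} recall={r:.3f} f1={f1:.3f}")
```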

Results and throughput

Zurich test sites

We trained a 4-stage Bld3d model to the following metrics on the Zurich test set:

- Absolute normal consistency: 0.88

- Normal consistency: 0.80

- F1@0.25m / 50,000 sampled points: 0.49

- F1@0.5m / 50,000 sampled points: 0.75

You can see a live web scene of the test reconstructions shown above at ArcGIS — Bld3d reconstructions. Zurich Test site #4.

LOD2.?

In our experiments we observed that, depending on the weights of the compound loss, the resulting meshes vary significantly in quality, extracted features, and spatial resolution.

Specifically, with chamfer_w=16.0, normal_w=0.1, edge_w=0.3, and normal_consistency_w=0.1, we saw Bld3d models acquire superstructure features from only a few input pixels. Below is an example of a smaller spire on the same cathedral, captured from ~1.5–2.0m² of input nDSM pixels, an LOD2.2-level capability:


Trending AI/ML Article Identified & Digested via Granola by Ramsey Elbasheer; a Machine-Driven RSS Bot

via WordPress https://ramseyelbasheer.io/2021/07/09/3d-buildings-from-imagery-with-ai/