Image Caption Generation by using CNN and RNN

Original Source Here

Defining the Model

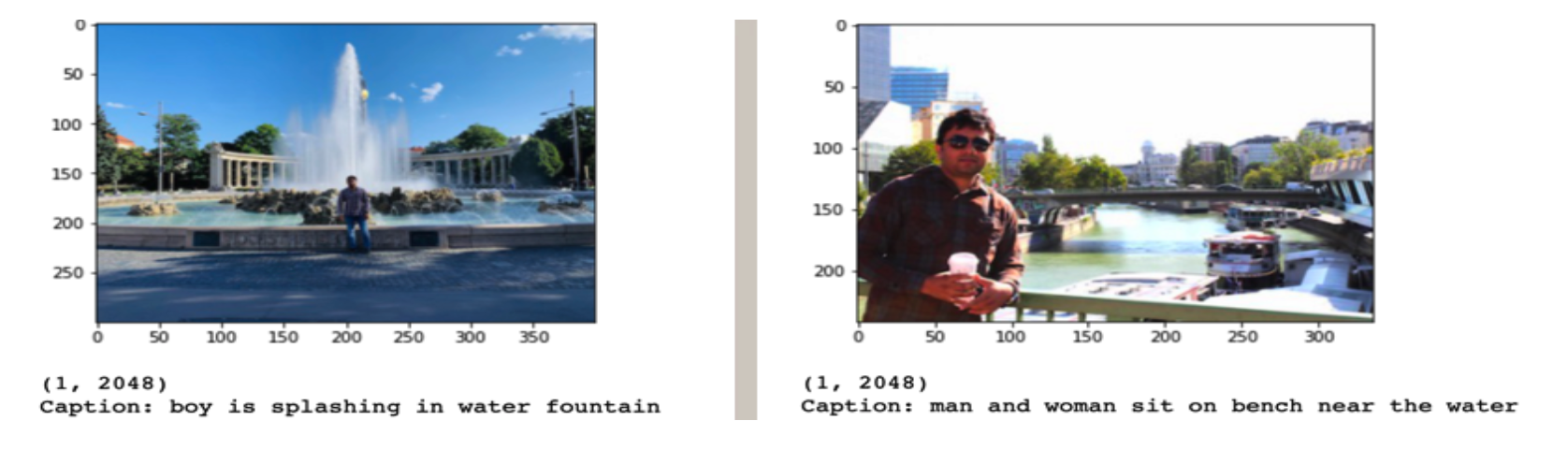

The model is an end-to-end neural network based on combining both CNN for image recognition followed by RNN text generation. It generates the text in Natural Language for an input image, as shown in the example.

We will describe the model in three parts:

Photo Feature Extractor. With the help of 16-layer VGG (CNN) model, we have pre-trained the Image Net dataset. This pre-processes the photos with the VGG model (without the output layer) and will use the extracted features predicted by this model as input.

Sequence Processor This is a word embedding layer for handling the text input, followed by a Long Short-Term Memory (LSTM) i.e recurrent neural network layer. This model is trained to predict each word of the sentence after the image is generated.

Decoder The feature extractor and sequence processor outputs a fixed-length vector. These are aligned with each other and processed by a dense layer to make a final prediction. In the end, an Image caption is generated.

The model Photo Function Extractor expects features of the input picture to be a vector of 4096 elements. These are processed via a dense layer to create a photographic representation of 256 elements. The model of the Sequence Processor requires input sequences with a predefined length (34 words) to be fed into an embedding layer using a mask to avoid padded values. The input models generate a vector of 256 elements as LSTM works with units of 256 memory units. To reduce overfitting in the training set both input models use 50% dropout regularization. The Decoder model uses an additional operation to merge the vectors from both input models. This is then fed into a Dense 256 layer of neurons and a final output Dense layer which allows a softmax prediction for the next word in the sequence over the entire output vocabulary.

Conclusions

- With the implementation of the algorithm, it can be realized that an end-to-end neural network system can automatically view an image and generates a responsible description in natural language. Image captioning based on the Convolution Neural network encodes an image into a representation followed by a recurrent neural network that generates corresponding text.

- It will be interesting to implement a generative adversarial network (GAN) for Images and text data to improve the performance of the model.

References

- Vinyals, Oriol, et al. “Show and tell: A Neural Image Caption Generator” , Proceedings of the IEEE conference on computer vision and pattern recognition. 2015, pp.3156–3164.

- Xu, Kelvin, et al. “Show, attend and tell: Neural image caption generation with visual attention”, International conference on machine learning, 2015, pp.1–6

- Reed, Scott, et al. “Generative Adversarial Text to Image Synthesis”, arXiv preprint arXiv:1605.05396 (2016), pp.1–10.

AI/ML

Trending AI/ML Article Identified & Digested via Granola by Ramsey Elbasheer; a Machine-Driven RSS Bot

via WordPress https://ramseyelbasheer.io/2021/04/16/image-caption-generation-by-using-cnn-and-rnn/